Tribute to Daniel Kahneman: Insights and Memories from Superforecasters

“Superforecasters are better than others at finding relevant information—possibly because they are smarter, more motivated, and more experienced at making these kinds of forecasts… Superforecasters are less noisy than…even trained teams.”

—Kahneman, Sibony, & Sunstein, in Noise: A Flaw in Human Judgment

“For as long as I have been running forecasting tournaments, I have been talking with Daniel Kahneman about my work. For that, I have been supremely fortunate. … Talking to Kahneman can be a Socratic experience: energizing as long as you don’t hunker down into a defensive crouch. So in the summer of 2014, when it was clear that superforecasters were not merely superlucky, Kahneman cut to the chase: ‘Do you see them as different kinds of people, or as people who do different kinds of things?’ My answer was, ‘A bit of both.’”

—Phil Tetlock in Superforecasting: The Art and Science of Prediction

Daniel Kahneman, Nobel Prize winner and a friend of Good Judgment, died aged 90 on 27 March 2024. He was a true pioneer in the realms of judgment, decision-making, and the psychological underpinnings that affect our forecasting work. Beyond his monumental contributions to psychology and economics, Kahneman took a keen interest in the art and science of Superforecasting, discussing Superforecasters in his last published book Noise and gracing us with his presence at a Good Judgment workshop. In this tribute to Daniel Kahneman, Superforecasters share insights and memories on how his work influenced and enriched them.

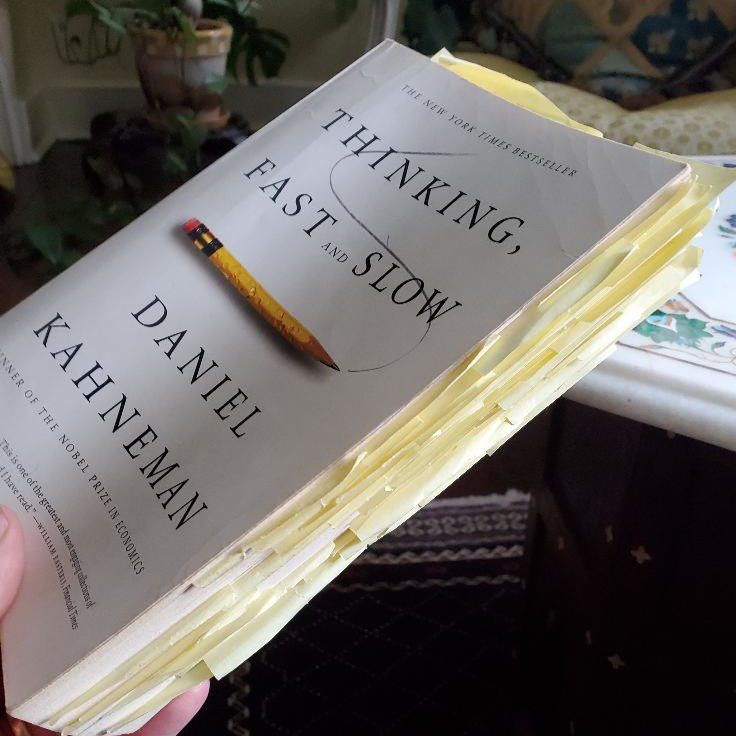

Superforecaster Dan Mayland:

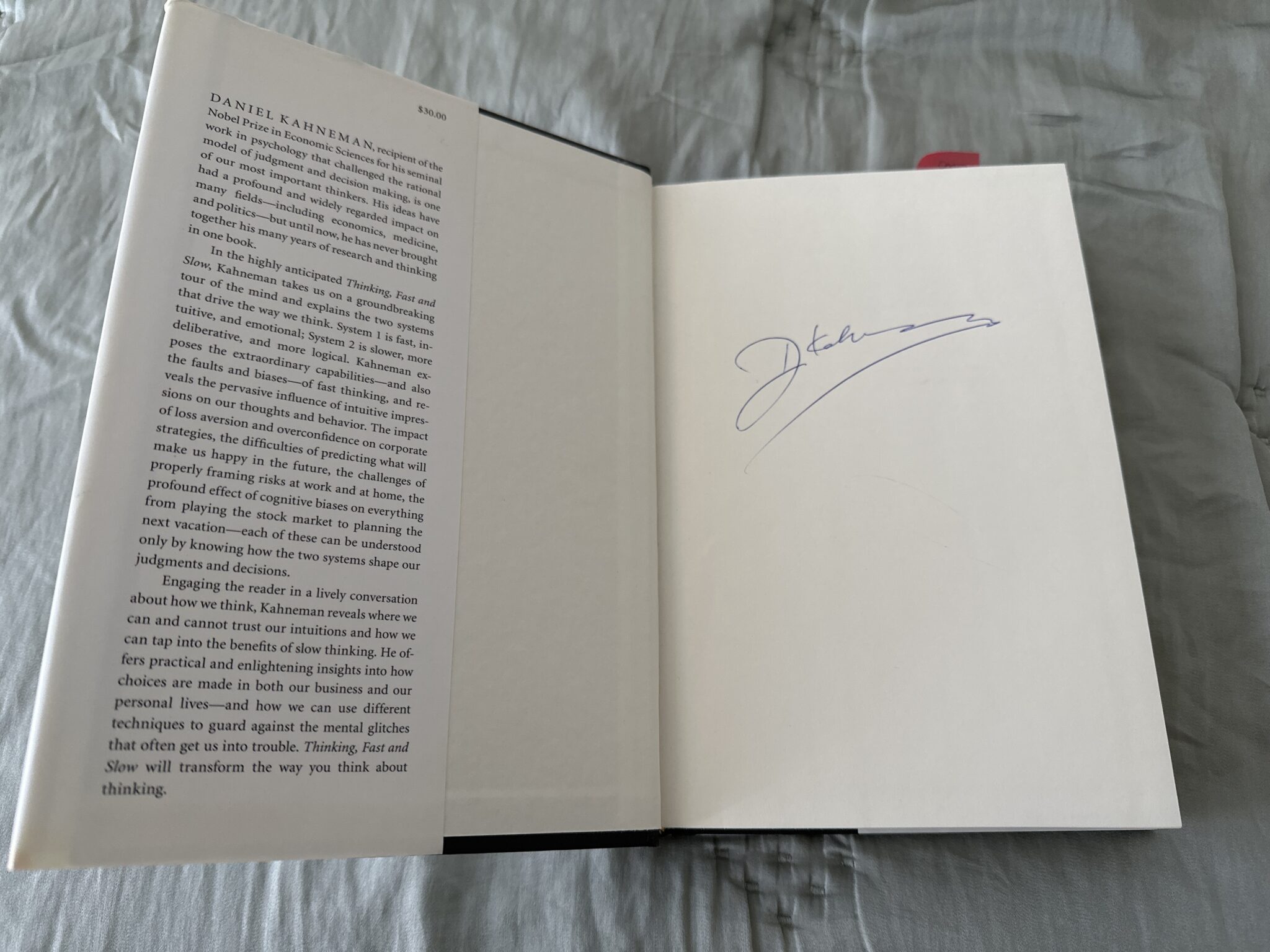

The best tribute I can give is to share a photo of my copy of Thinking, Fast and Slow, which I read around the same time I started forecasting. I have this (slightly!) obsessive habit of affixing small Post-It notes to book passages that I think are particularly insightful and don’t want to lose track of once I turn the page. (Even though, the mind being what it is, I do.) It’s my version of taking pictures of sunsets in an attempt to preserve the memory. The photo below speaks to the level of insight I thought Kahneman had to offer.

Co-founder and CEO Emeritus of Good Judgment Inc, Terry Murray:

In the early days of Good Judgment Inc, I had the privilege of being in an extended conversation that included our co-founders Phil Tetlock and Barb Mellers along with Danny Kahneman and a couple of his colleagues. I expected Danny to have great questions and insights on the substantive side of our forecasting work because of his own research interests and his past interest in the Good Judgment Project itself. What surprised me most at the time was that Danny’s questions about the business side of our fledgling company were among the best questions anyone had ever posed to me.

Looking back now, I shouldn’t have been surprised. The handful of opportunities I had to interact directly with Danny all confirmed what his writings on judgment and decision-making tell us: Danny was a master at cutting to the chase. Whatever the topic under discussion, he would quickly filter out the noise and home in on the signal. If I could create the ideal AI personal assistant, it would be one that poses Danny-like questions to challenge me until I too filtered out the noise and focused on what is most important. I’d like to think that Danny would approve.

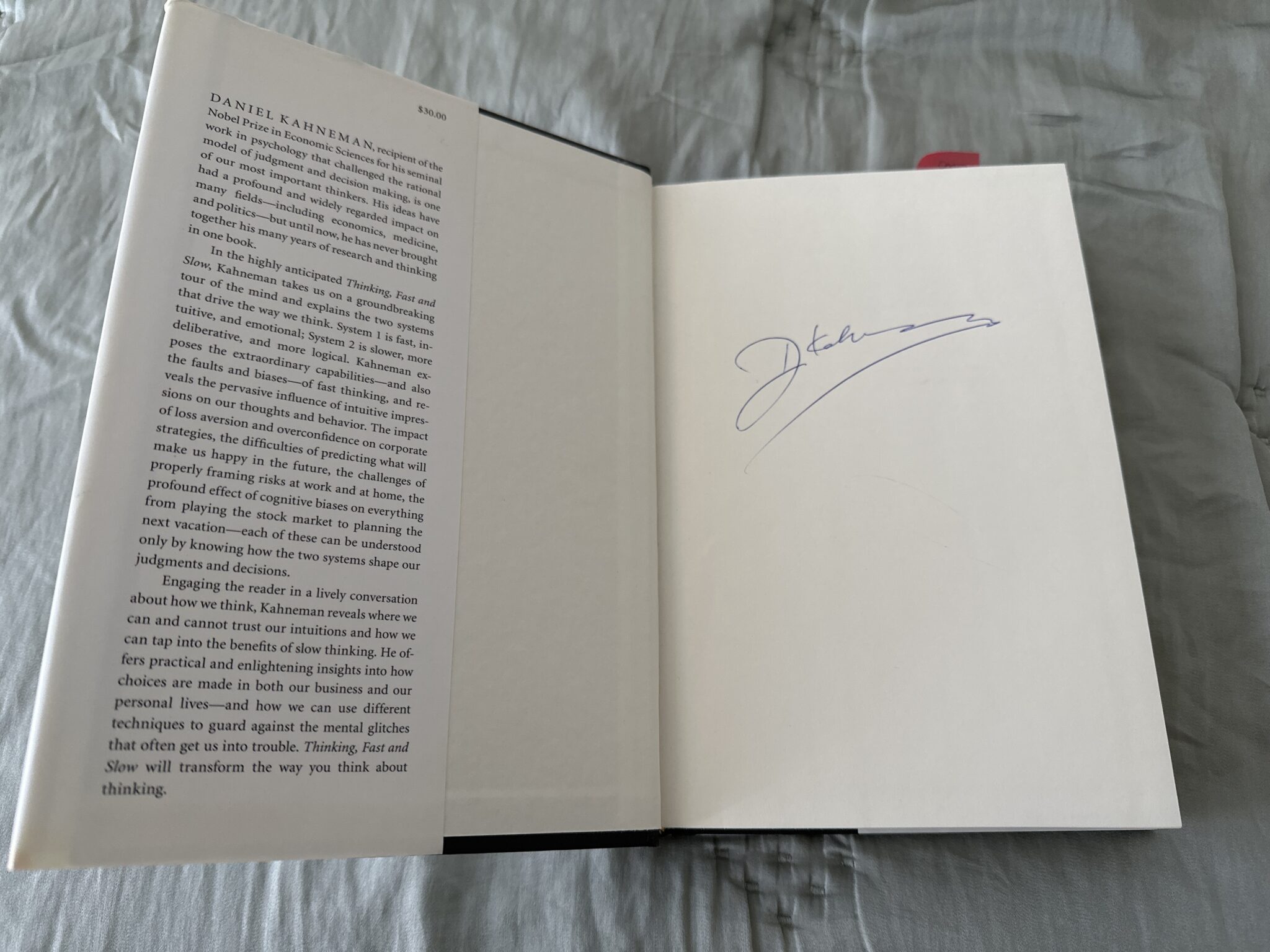

Superforecaster and CEO of Good Judgment Inc, Warren Hatch:

A while back, we ran a “Noise Challenge” on Good Judgment Open that was inspired by the launch of Noise, the book Daniel Kahneman co-wrote. He generously agreed to sign copies that we could present to the top performers at the end of the competition. So I went by his house to meet him in the lobby. I figured it would take five minutes of his time, tops. Instead, we sat down and spent the better part of an hour going over some of the questions then posed to the Superforecasters. It was a masterclass.

Superforecaster Giovanni Ciriani:

I read Thinking, Fast and Slow in early 2013. On 21 March 2013, David Brooks wrote the NY Times column “Forecasting Fox,” which centered on Tetlock and the Good Judgment Project and credited Kahneman’s Thinking, Fast and Slow as training material. I read the column only because the book was mentioned. On 22 March 2013, I signed up for the GJP. Of course, one could play with counterfactuals to see which factor mattered more in my decision to join, but I see Kahneman’s book as the sine qua non, the condition without which it would not have happened.

Superforecaster and GJ Director Ryan Adler:

I will ashamedly confess that my knowledge of Danny Kahneman’s work was limited before I found myself in the Good Judgment Project, but immersing myself in it was and is an absolute pleasure. To borrow some phrasing from Robert Heinlein, Kahneman and Tversky studied what man is, not what they wanted him to be, and it is saddening to know that there will be less of that kind of thinking with his passing.

Superforecaster Vijay Karthik:

I have learnt a lot from articles about him and from his book. My life has become much richer as I try to put his recommendations into action as best I can.

Superforecaster David Fisher:

I remember listening to him on a podcast. He was pessimistic that information alone could change opinions about global warming. He said people were likely to consider such information only if they identified with the person giving it. I have read Thinking, Fast and Slow and most of Noise, and I have nothing but respect for him. Decisions made only out of economic self-interest? He disproved that. He deserved the Nobel.

Superforecaster Dwight Smith:

His influence on my life has been tremendous. It was through his work that I became a far better forecaster. And that has led to a multitude of adventures and good works. To be so profoundly influenced by such a great mind even once in a lifetime is quite a gift.