Superforecasting® the Fed: A Look Back over the Last 6 Months

The Federal Reserve’s target range for the federal funds rate is the single most important driver in financial markets. Anticipating inflection points in the Fed’s policy has immense value, and Good Judgment’s Superforecasters have been beating the futures markets this year, signaling the Fed would continue to hike until the June pause while markets and experts alike flip-flopped on their calls.

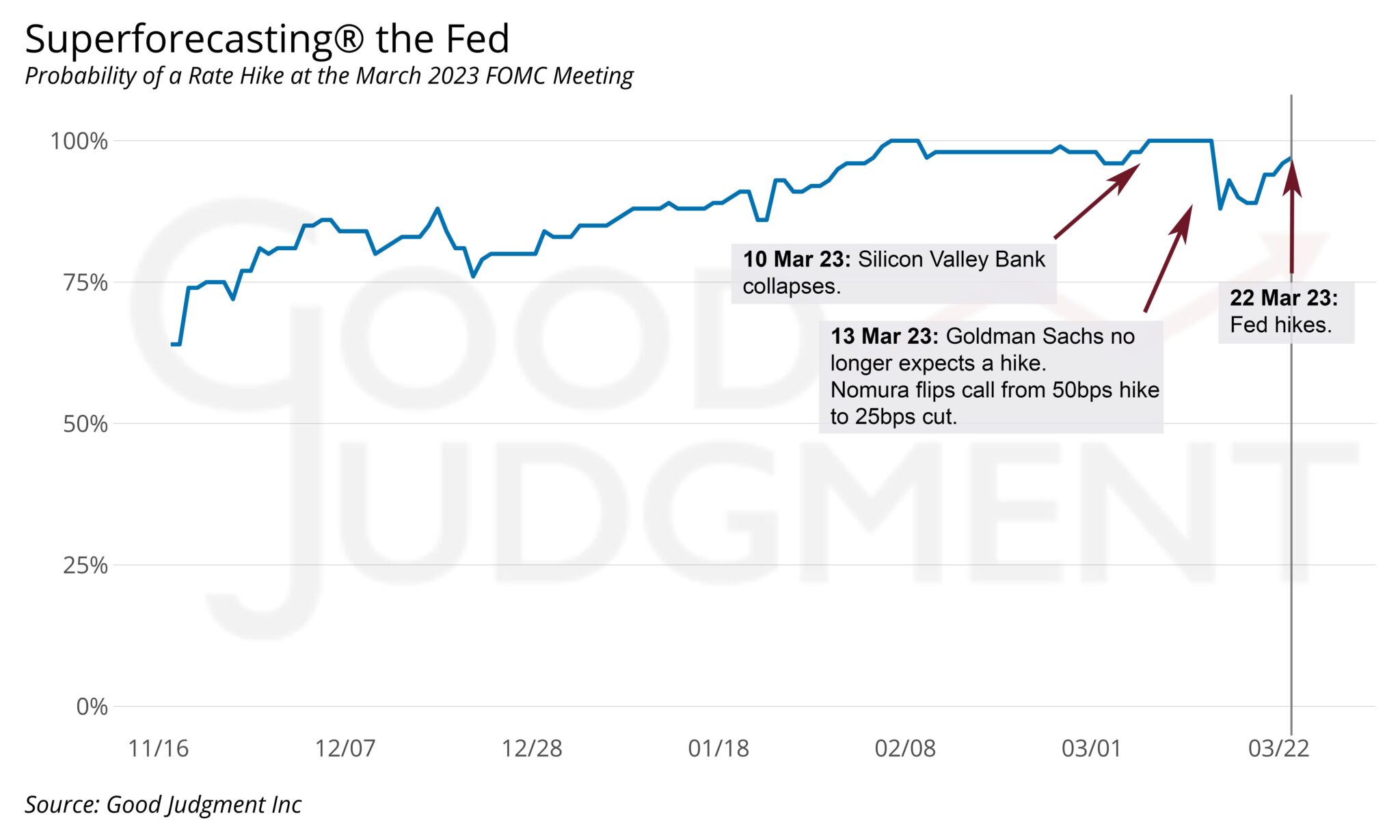

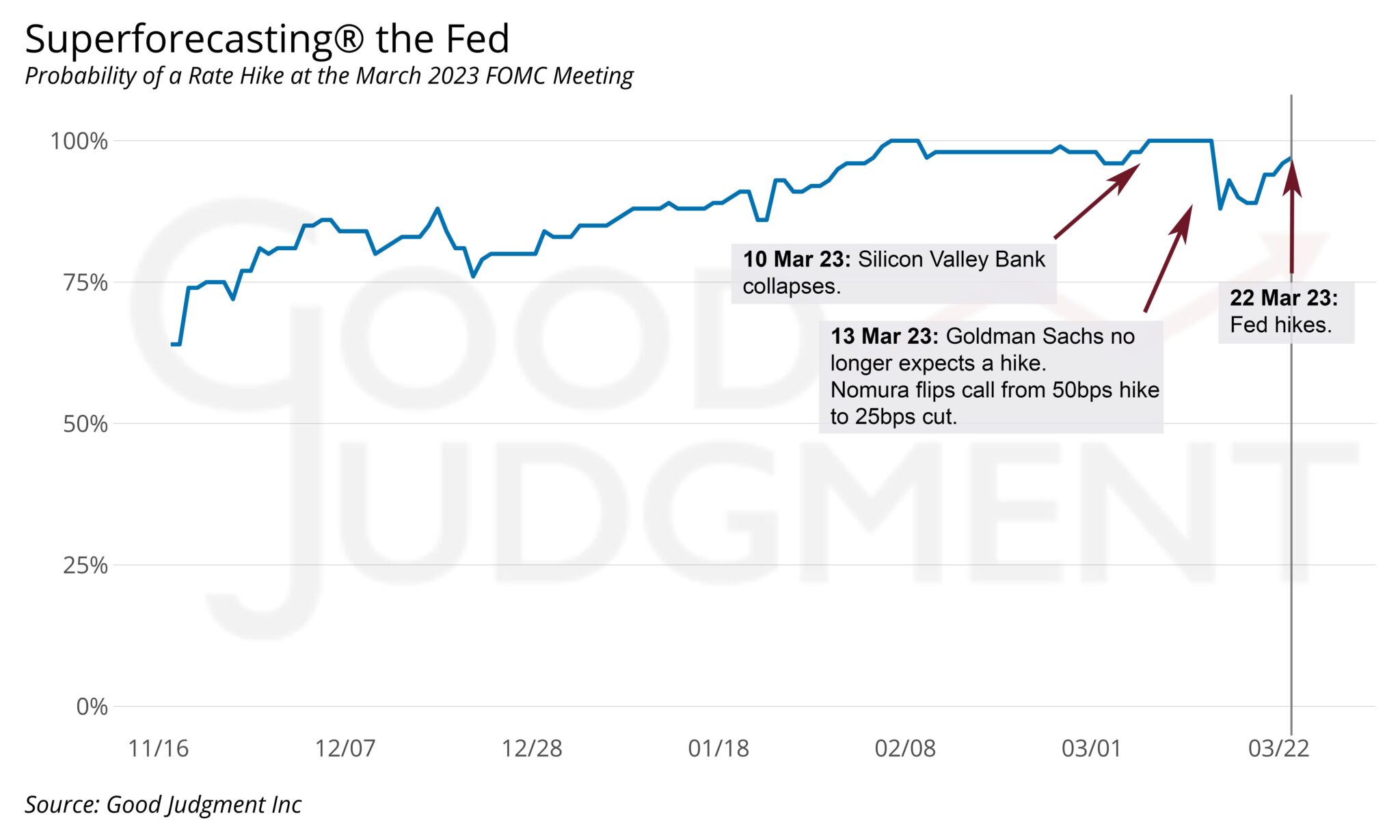

- Ahead of the Fed’s March meeting, when Silicon Valley Bank went under, the futures markets priced out a hike and began flirting with the possibility of a cut as early as summer. Leading market observers like Goldman Sachs said the Fed would pause, and Nomura said the Fed would start to cut at that meeting.

- Then the futures markets priced in a pause for the May meeting. Experts like Pimco’s former chief economist Paul McCulley also prematurely predicted that the Fed would go on hold. As the date of the meeting approached, the futures markets—as well as most market participants—came to share the Superforecasters’ view that another hike was in the cards, but lagged behind the Superforecasters by nearly a month.

- In the weeks heading into the June meeting, the futures oscillated between a pause, a hike, and possibly even a cut. A stream of stronger economic data led experts such as Mohamed El-Erian to forecast that the Fed would continue to raise rates for at least another meeting and perhaps longer. Not the Superforecasters. They had been saying since 2 April 2023 that the Fed would most likely hit pause, a view that, once again, eventually became the consensus.

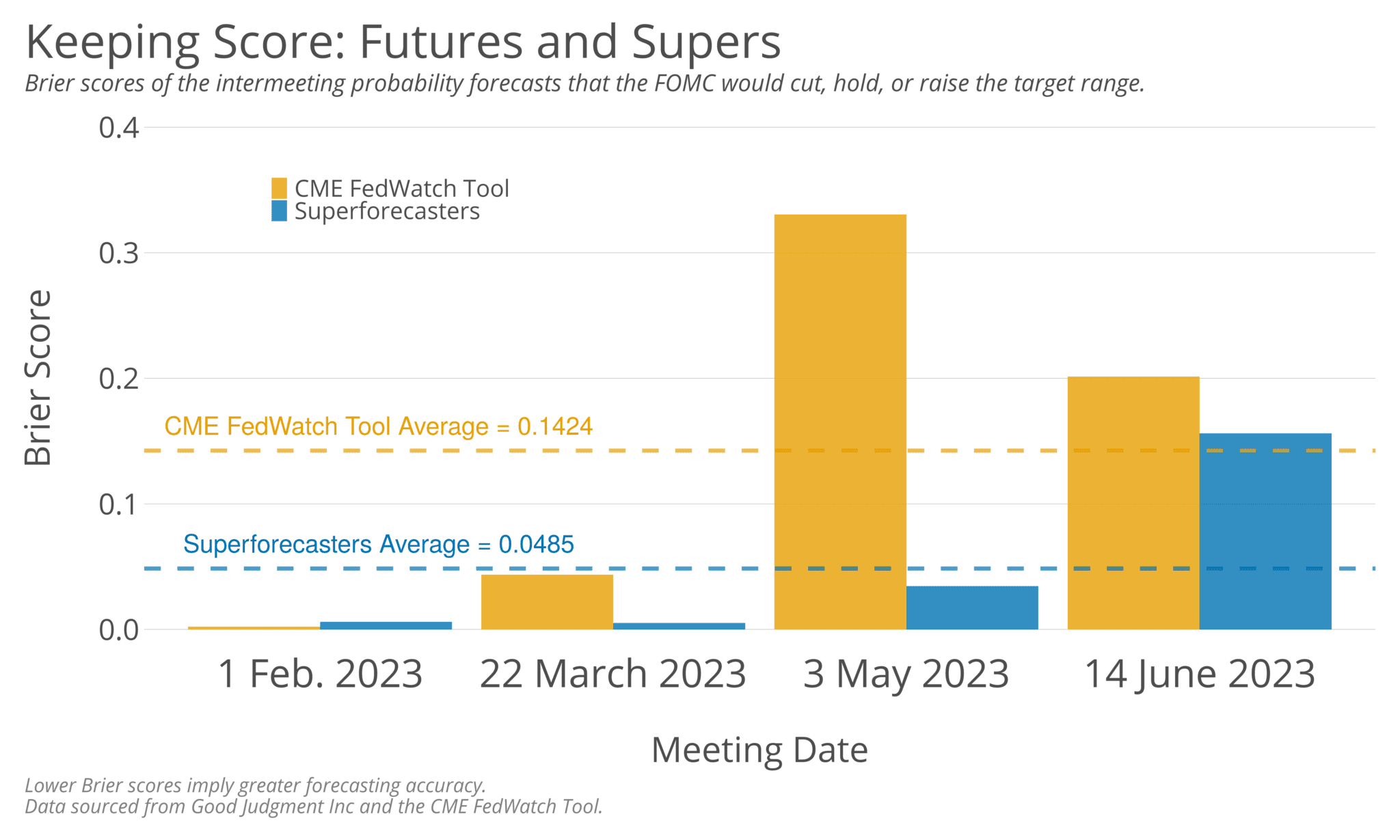

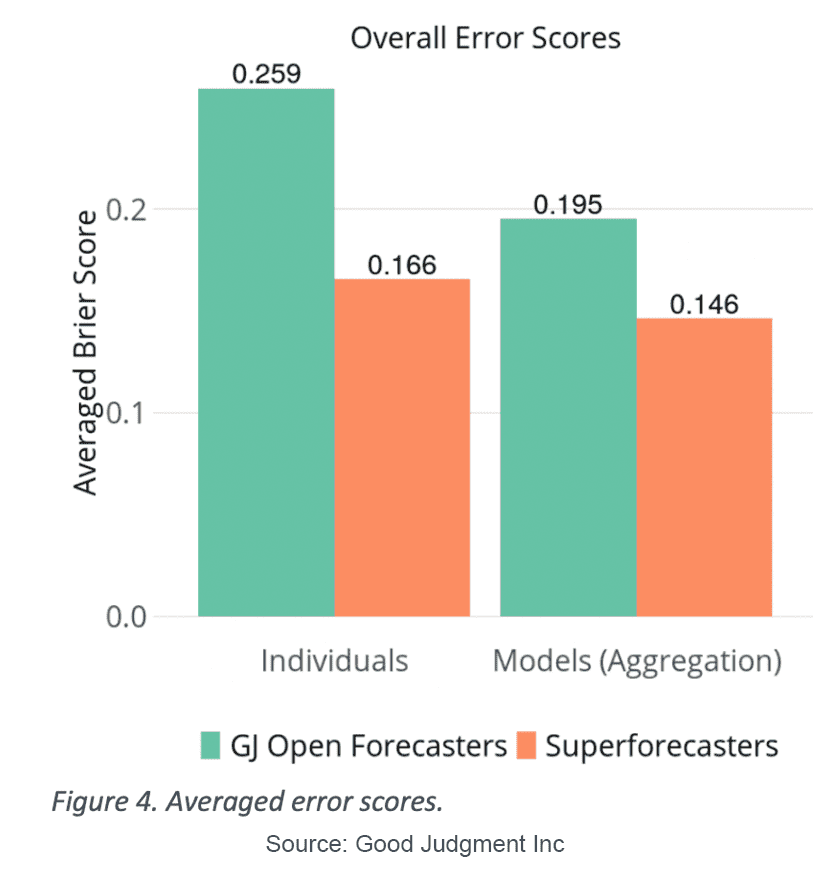

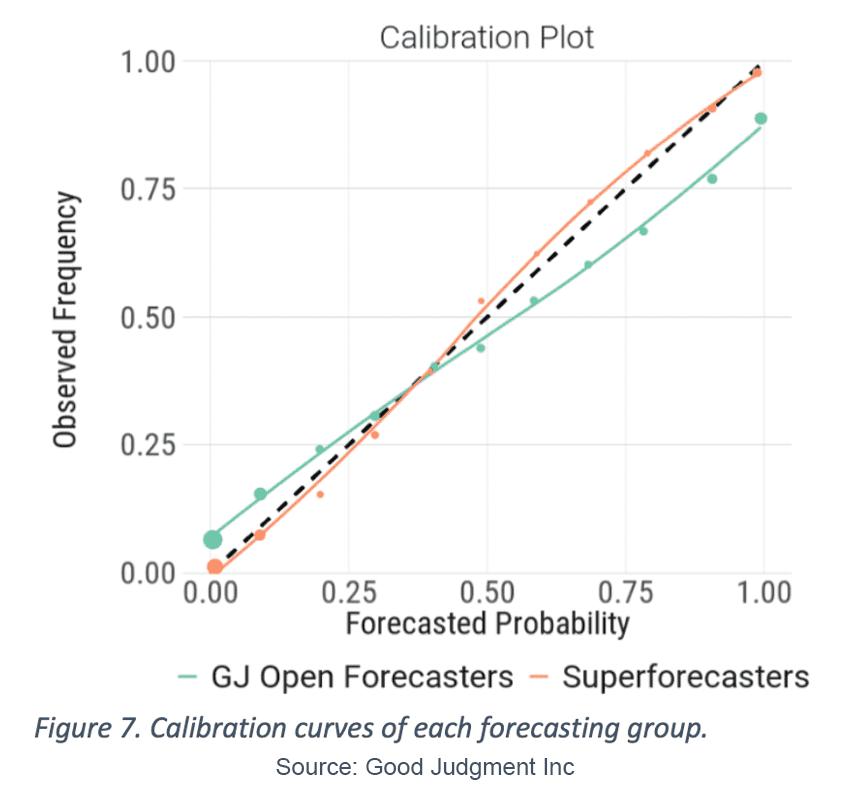

A comparison of the forecasts of two groups (Good Judgment’s Superforecasters and the futures markets, as reflected in the CME FedWatch Tool) for the last four Federal Reserve meetings shows that the Superforecasters assigned higher probabilities to the correct outcome. They were 66% more accurate than the futures (as measured by Brier scores) and had lower noise in their forecasts (as measured by standard deviation).
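For readers unfamiliar with these two measures, here is a minimal Python sketch of how a Brier score and a standard deviation can be computed for a series of daily forecasts. It is an illustration only: the probabilities are made up, the helper names (`brier_score`, `relative_improvement`) are hypothetical, and the binary form of the Brier score is an assumption, since the exact variant and data behind the 66% figure are described in the whitepaper rather than here.

```python
from statistics import mean, pstdev


def brier_score(forecasts, outcomes):
    """Mean squared difference between forecast probabilities and 0/1 outcomes.

    Lower is better: 0.0 is a perfect forecast, 0.25 matches a constant 50% call.
    This is the binary form of the score (an assumption for this sketch).
    """
    if len(forecasts) != len(outcomes):
        raise ValueError("forecasts and outcomes must have the same length")
    return mean((p - o) ** 2 for p, o in zip(forecasts, outcomes))


def relative_improvement(score_a, score_b):
    """Fraction by which score_a is lower than score_b (one way to read 'X% more accurate')."""
    return (score_b - score_a) / score_b


# Hypothetical daily probabilities assigned to the outcome that actually occurred.
# These are NOT Good Judgment or CME FedWatch figures.
steady_forecaster = [0.80, 0.85, 0.85, 0.90, 0.90]
noisy_forecaster = [0.40, 0.75, 0.55, 0.30, 0.95]
outcomes = [1, 1, 1, 1, 1]  # the outcome occurred, so each day is scored against 1

steady_brier = brier_score(steady_forecaster, outcomes)
noisy_brier = brier_score(noisy_forecaster, outcomes)

print("Brier (steady):", round(steady_brier, 4))
print("Brier (noisy): ", round(noisy_brier, 4))
print("Relative improvement:", round(relative_improvement(steady_brier, noisy_brier), 2))

# Noise: how much each series of daily forecasts moves around its own average.
print("Std dev (steady):", round(pstdev(steady_forecaster), 4))
print("Std dev (noisy): ", round(pstdev(noisy_forecaster), 4))
```

In this toy example the steadier series scores better on both measures, because it stays close to the eventual outcome instead of swinging between calls.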

See our new whitepaper for details. We also provide subscribers with a full summary of all our active Fed forecasts, which is updated before and after each meeting (available on request).

Good Judgment’s Superforecasters have been providing a clear signal on the Fed’s policy well before the futures and many market participants. Subscribers to FutureFirst™ have 24/7 access to evolving forecasts by the Superforecasters on questions that matter, including Fed policy through the rest of the year and beyond, along with a rich cross-section of other questions crowd-sourced directly from users, including questions on Ukraine, China, and the upcoming US elections.