In 2012, the Good Judgment Project research team made a big bet that five 12-person teams of elite forecasters could make more accurate forecasts than any other participants in the IARPA ACE competition. We were right.

The Good Judgment Project as a whole was the runaway winner of the ACE competition. And the Superforecasters, even without sophisticated aggregation, produced forecasts that were virtually indistinguishable from the results of Good Judgment’s best aggregation algorithm, which incorporated data from 1,200 forecasters in addition to the Superforecasters.

The simple median of their forecasts was 35% to 72% more accurate than the forecasts of any other research team in the competition!

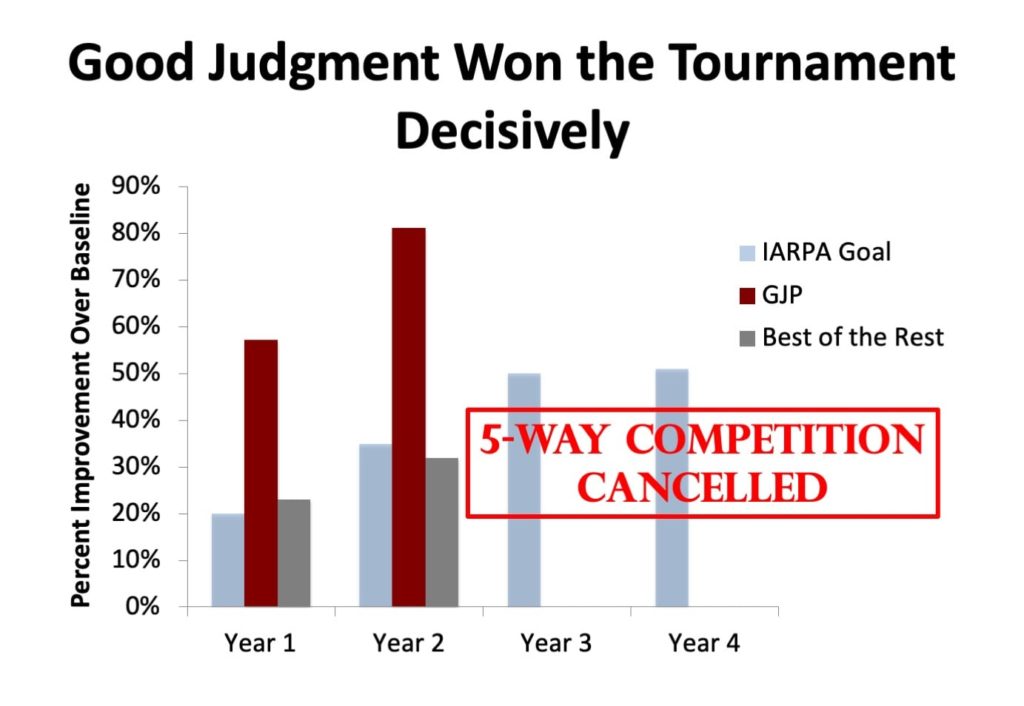

Putting the Superforecasters together on elite teams boosted the Good Judgment Project’s already superior accuracy. The comparison chart below shows that Good Judgment’s lead over all other competitors increased substantially after we created the Superforecaster teams in Year 2 of the ACE competition. Good Judgment’s performance was so strong that IARPA decided to end the competition at that point, with Good Judgment being the only research team to continue for the final two years of the program.

To learn more about how the Superforecasters led the Good Judgment Project to victory in the ACE competition, see Mellers et al. (2014), Psychological strategies for winning a geopolitical forecasting tournament, *Psychological Science*, 25(5), 1106–1115.

“Team Good Judgment, led by Philip Tetlock and Barbara Mellers of the University of Pennsylvania, beat the control group by more than 50%. This is the largest improvement in judgmental forecasting accuracy observed in the literature.”

Steven Rieber, Program Manager, IARPA

Schedule a consultation to learn how our FutureFirst monitoring tool, custom Superforecasts, and training services can help your organization make better decisions.