This project focused on individual reviews of Joseph Carlsmith’s paper, “Is Power-Seeking AI an Existential Risk?” It also featured forecasts on a dozen key questions about risks related to Artificial Intelligence (AI) and Artificial General Intelligence (AGI). It ran from August to October 2022, with a follow-up round in spring 2023, and was made possible with generous support from Open Philanthropy.

The project was divided into two phases.

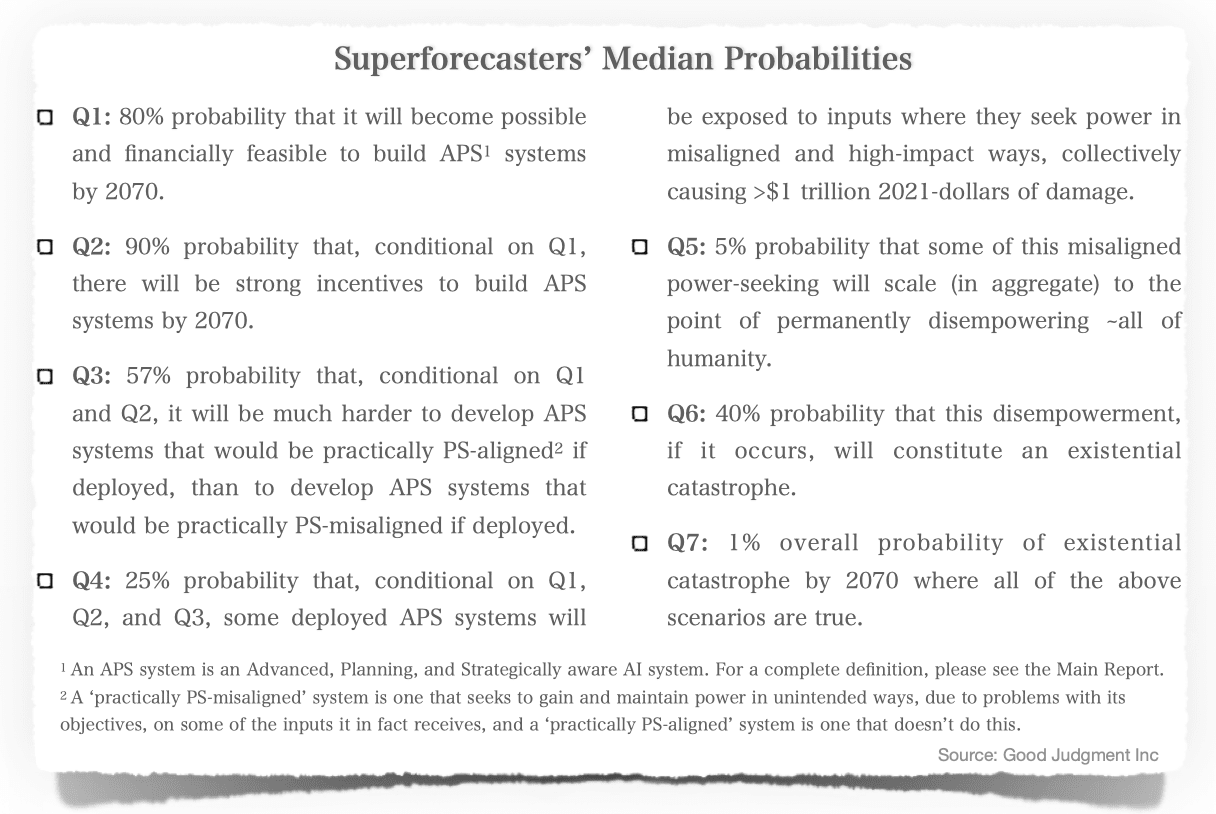

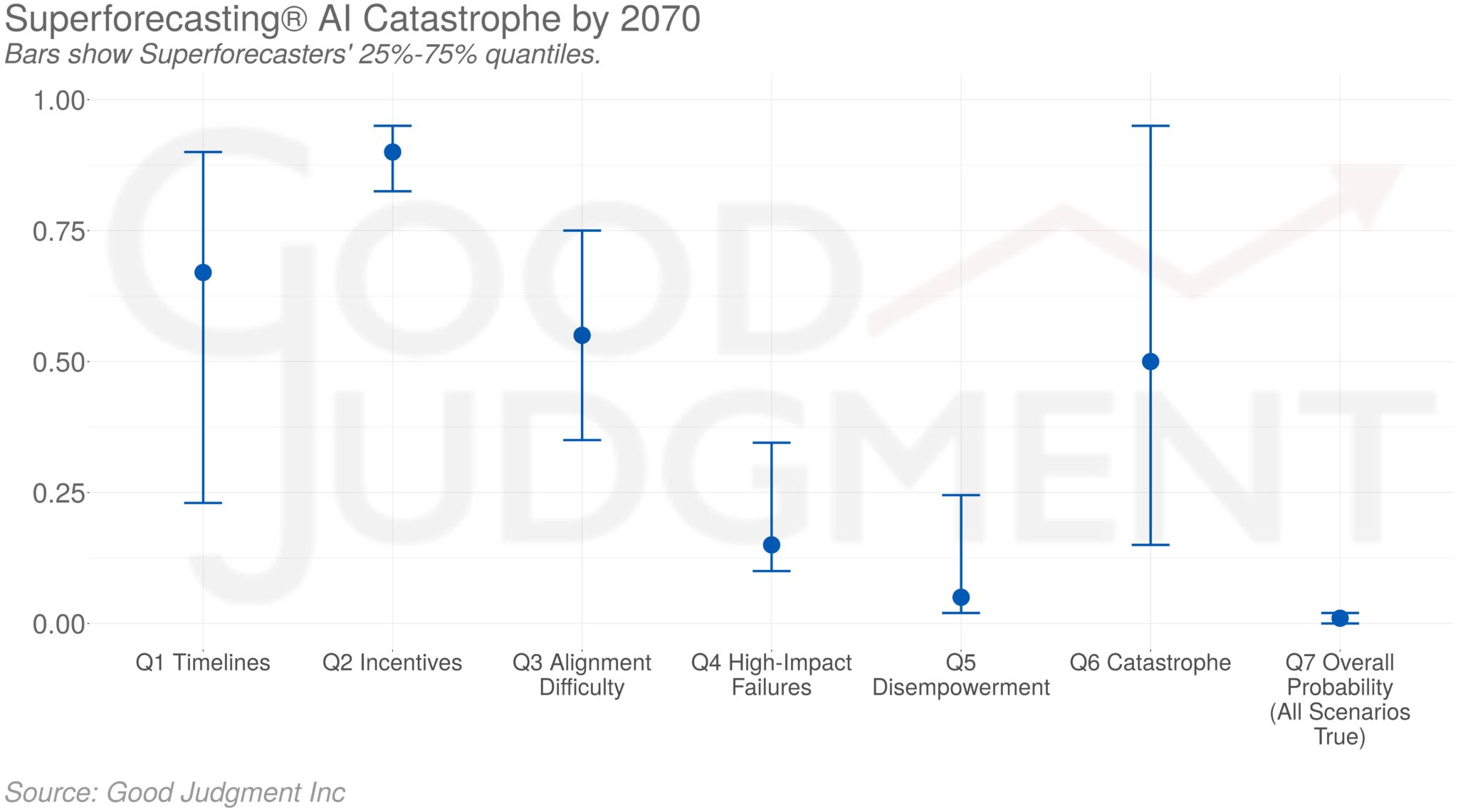

Phase I, the Main Project, entailed an in-depth evaluation of Joseph Carlsmith’s paper. Good Judgment’s professional Superforecasters were asked to complete a standardized survey, which had also been distributed to a panel of AI experts. Good Judgment’s panel of Superforecasters was subdivided into two groups: those who self-identified as experts in AI and those who did not.

At the initial stage, 21 Superforecasters (10 with expertise in AI and 11 generalists without it) worked individually to review the paper and answer a detailed questionnaire. Three Superforecasters preferred not to disclose their individual reviews; the others are provided in the links below. Following that stage, these 21 Superforecasters were joined by 12 colleagues, and the combined group collaborated as a forecasting team on Good Judgment’s private platform to refine and update their judgments on the forecast questions. All of those reports are provided below.

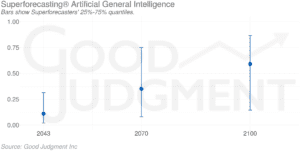

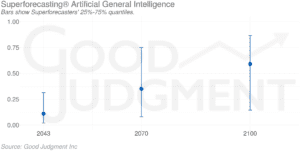

In Phase II, the Supplementary Project, the Superforecasters provided forecasts on the following four questions:

(1) Will AGI exist by 1 January 2043?

(2) Will AGI exist by 1 January 2070?

(3) Will AGI exist by 1 January 2100?

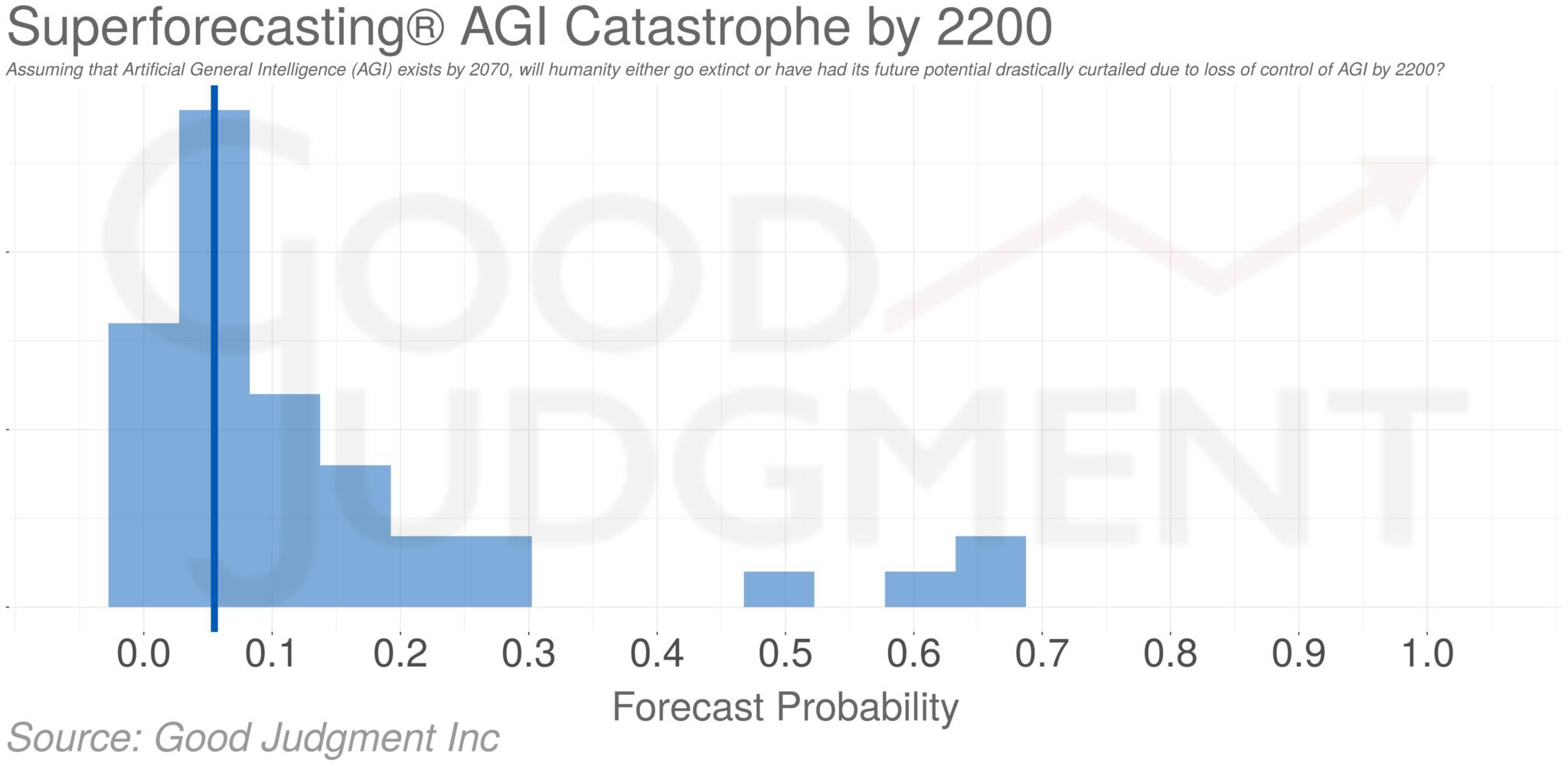

(4) Assuming that AGI exists by 2070, will humanity either go extinct or have had its future potential drastically curtailed due to loss of control of AGI by 2200?

The reports below present the forecasts alongside the key drivers and risks identified in each phase of the project.

Summary Reports

Supporting Materials for Phase I: Main Project

Individual Reviews

Superforecaster Reports

Supporting Materials for Phase II: Supplementary Project

Superforecaster Reports
