Books on Making Better Decisions: Good Judgment’s Back-to-School Edition

Since the publication of Tetlock and Gardner’s seminal Superforecasting: The Art and Science of Prediction, many books and articles have been written about the ground-breaking findings of the Good Judgment Project, its corporate successor Good Judgment Inc, and the Superforecasters.

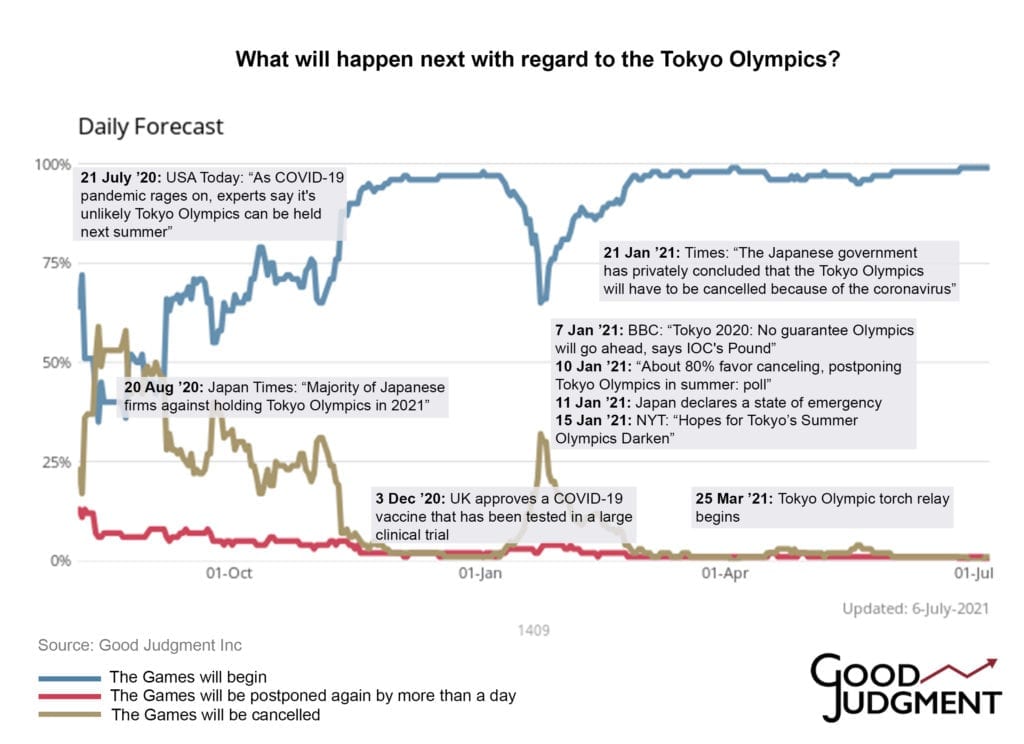

This is not surprising: Decision-makers have a lot to learn from the Superforecasters. Thanks to being actively open-minded and unafraid to rethink their conclusions, the Superforecasters have been able to make accurate predictions where experts often failed. They know how to think in probabilities (or “in bets”), reduce the noise in their judgments, and mitigate cognitive biases such as overconfidence. As Tetlock and Good Judgment Inc have shown, these are skills that can be learned.

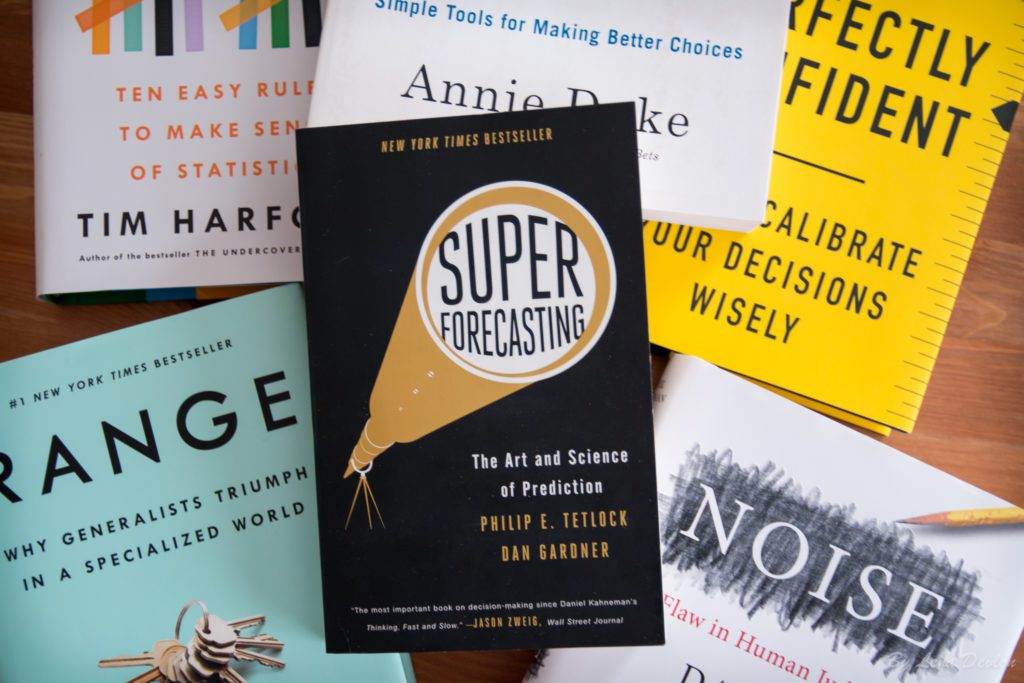

Here is a short list of eight notable books that present a wealth of information on ways to evaluate an uncertain future and improve decision-making.

- Superforecasting: The Art and Science of Prediction by Philip E. Tetlock and Dan Gardner (2015)

In 2011, IARPA, the research arm of the US intelligence community, launched a massive competition to identify cutting-edge methods to forecast geopolitical events. Four years, 500 questions, and over a million forecasts later, the Good Judgment Project (GJP), led by Philip Tetlock and Barbara Mellers at the University of Pennsylvania, emerged as the undisputed victor in the tournament. GJP’s forecasts were so accurate that they even outperformed those of intelligence analysts with access to classified data. One of GJP’s biggest discoveries was the Superforecasters: GJP research found compelling evidence that some people are exceptionally skilled at assigning realistic probabilities to possible outcomes, even on topics outside their primary subject-matter training.

In their New York Times bestseller, Superforecasting, our cofounder Philip Tetlock and his colleague Dan Gardner profile several of these talented forecasters, describing the attributes they share, including open-minded thinking, and argue that forecasting is a skill to be cultivated, rather than an inborn aptitude.

- Noise: A Flaw in Human Judgment by Daniel Kahneman, Olivier Sibony, and Cass R. Sunstein (2021)

Noise, defined as unwanted variability in judgments, can be corrosive to decision-making. Yet, unlike its better-known companion, bias, it often remains undetected, and therefore unmitigated, in decision processes. In addition to offering research-based insights into better decision-making and remedies to identify and reduce noise as a source of error, Kahneman and his colleagues take a close look at a select group of forecasters, the Superforecasters, whose judgments are not only less biased but also less noisy than those of most decision-makers. As Noise co-author Cass Sunstein says, “Superforecasters are less noisy—they don’t show the variability that the rest of us show. They’re very smart; but also, very importantly, they don’t think in terms of ‘yes’ or ‘no’ but in terms of probability.”

- Think Again: The Power of Knowing What You Don’t Know by Adam Grant (2021)

Intelligence is often seen as the ability to think and learn, but in a rapidly changing world, there’s another set of cognitive skills that might matter more: the ability to rethink and unlearn. As an organizational psychologist, Adam Grant investigates how we can embrace the joy of being wrong, bring nuance to charged conversations, and build schools, workplaces, and communities of lifelong learners. He also profiles Good Judgment Inc’s Superforecasters Kjirste Morrell and Jean-Pierre Beugoms, who embody the outstanding thought processes suggested in the book. You can read more about Morrell and Beugoms in our interviews with them.

- Range: Why Generalists Triumph in a Specialized World by David Epstein (2019)

David Epstein examines the world’s most successful athletes, artists, musicians, inventors, and forecasters to show that in most fields—especially those that are complex and unpredictable—generalists, not specialists, are primed to excel. In a chapter about the failure of expert predictions, he discusses Phil Tetlock’s research, the GJP, and how “a small group of foxiest forecasters—just bright people with wide-ranging interests and reading habits—destroyed the competition” in the IARPA tournament. Good Judgment Inc’s Superforecasters Scott Eastman and Ellen Cousins, profiled in the book, weigh in on such topics as curiosity, aggregating perspectives, and learning from specialists without being swayed by their often narrow worldviews.

Other books that mention Superforecasting, Good Judgment Inc, or the Good Judgment Project

- The Data Detective: Ten Easy Rules to Make Sense of Statistics by Tim Harford (2021) (published outside North America as How to Make the World Add Up: Ten Rules for Thinking Differently About Numbers, 2020)

- How to Decide: Simple Tools for Making Better Choices by Annie Duke (2020)

- Perfectly Confident: How to Calibrate Your Decisions Wisely by Don A. Moore (2020)

- Thinking in Bets: Making Smarter Decisions When You Don’t Have All the Facts by Annie Duke (2018)