Good Judgment Inc: A Year in Review

From our headquarters in Manhattan to Canada to Brazil and points in between, the Good Judgment team had a productive and exciting year in 2021. Here are some of the key developments and projects we worked on in the past year.

FutureFirst™ Launched

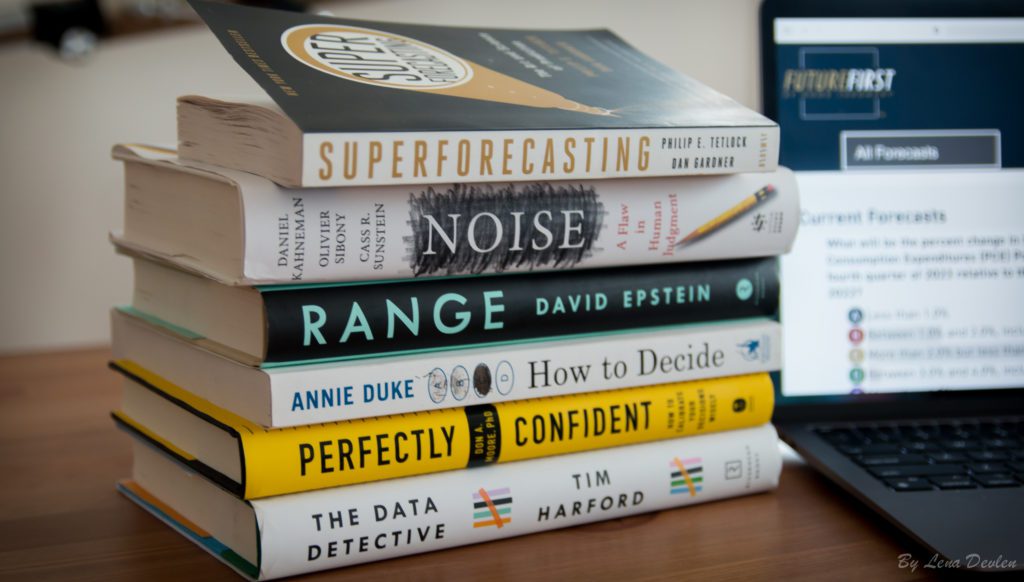

One of the biggest additions to Good Judgment’s spectrum of services in 2021 was the launch of FutureFirst. FutureFirst is a client-driven subscription platform that gives our user community unlimited access to all of Good Judgment’s subscription Superforecasts.

In many ways, FutureFirst is a consolidation of our scientific experiments and several years of successful client engagements. We designed FutureFirst to

- offer clients one-click access to the collective wisdom of our international team of Superforecasters—to their predictions, rationales, and sources;

- enable easy monitoring of the Superforecasters’ predictions on a wide range of topics (economy and finance, geopolitics, environment, technology, health, and more); and

- allow clients to nominate and upvote new questions that matter to their organization so that the topics are crowd-sourced from the community of clients directly.

With the addition of the Class of 2022 Superforecasters, Good Judgment now works with more than 180 professional Superforecasters. They reside on every continent except Antarctica and have been identified through a rigorous process to join the world’s most accurate forecasters.

There are currently some 80 active forecasts on FutureFirst, with new questions being added nearly every week. Taken together, the forecasts on the platform paint the big picture of global risk with accuracy not found anywhere else.

Improving Ways of Eliciting and Aggregating Forecasts

At the same time, we continue to crowdsource other ideas to enhance the value of our service for clients. In response to user feedback and innovations by our data science team, we:

- now offer “continuous forecasts” so that clients can have a target forecast number as well as probabilities distributed across ranges;

- provide “rolling forecasts” on a custom basis with predictions that automatically advance each day so that the time horizon is fixed—for instance, the probability of a recession in the next 12 months;

- will soon launch API access, giving clients a data feed directly into their models.

Superforecasters in the Media

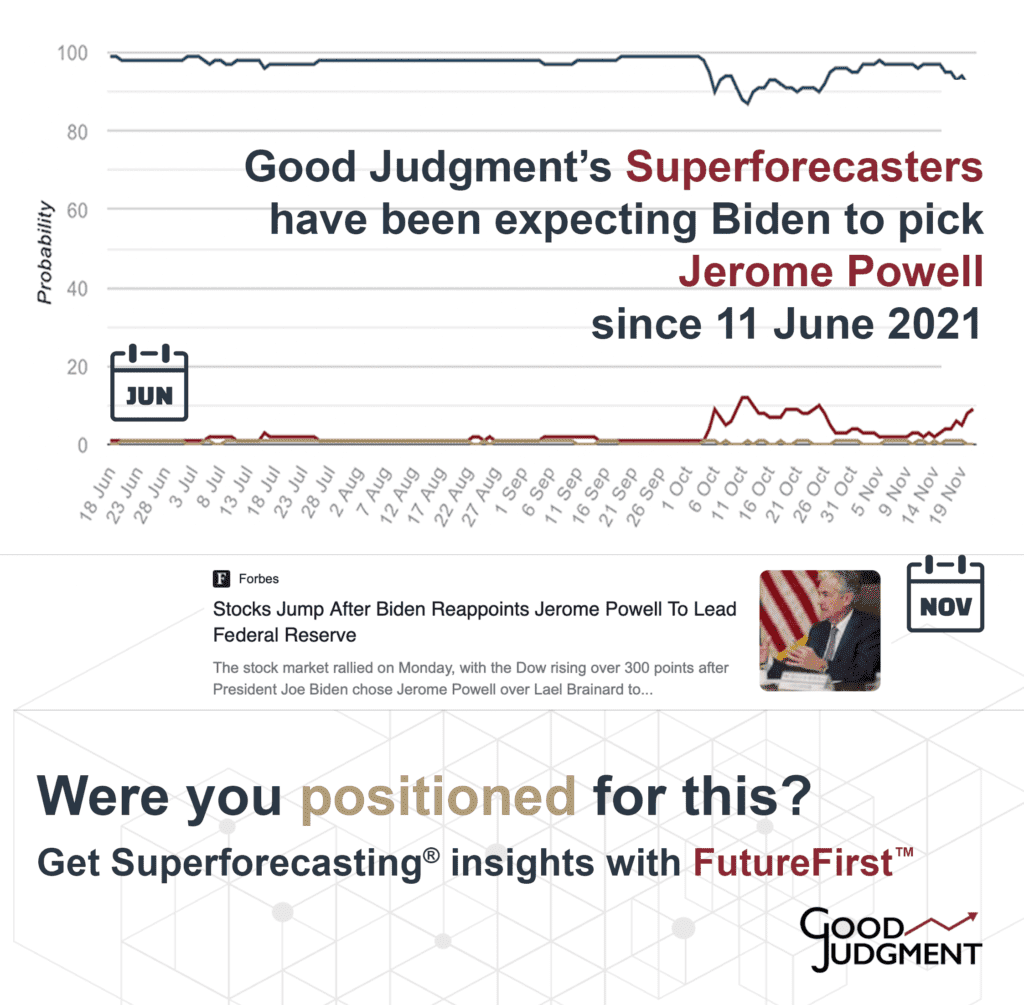

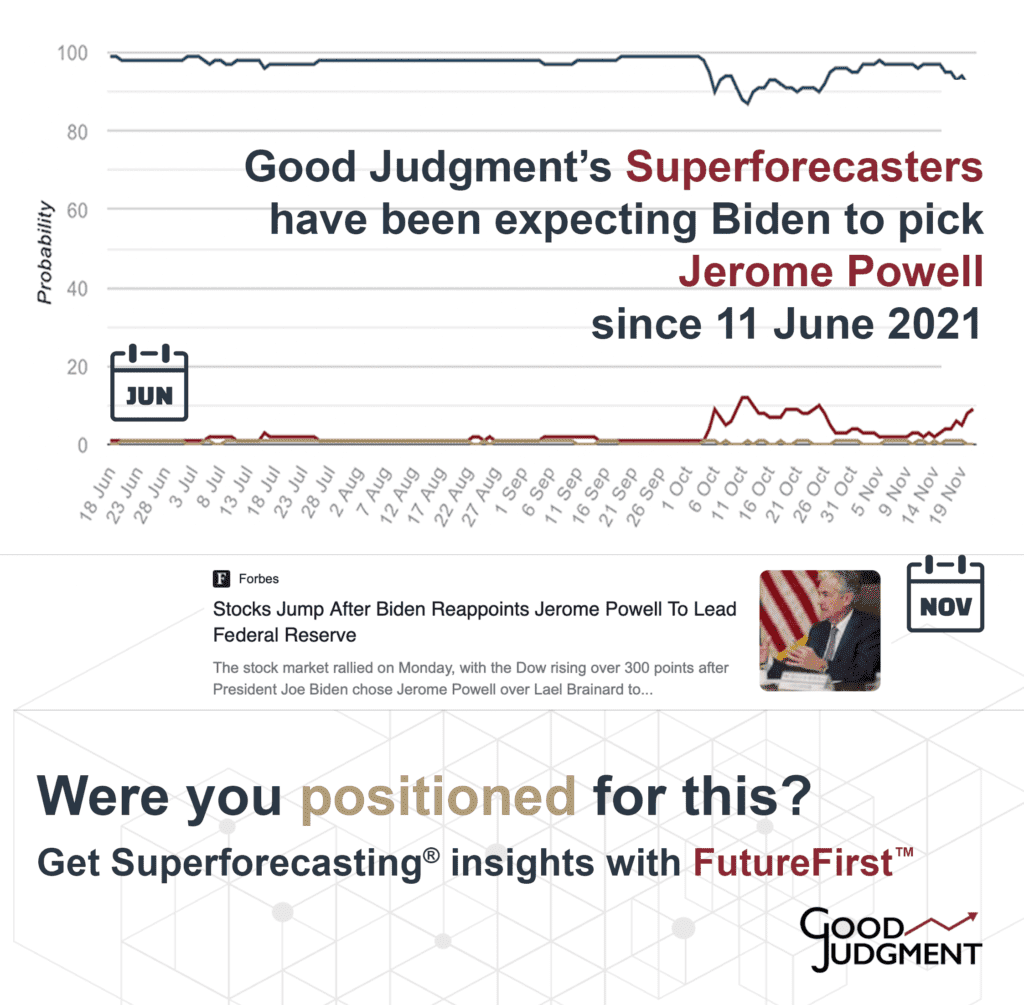

From questions about the Tokyo Olympics to the renomination of Jerome Powell to our early forecasts about Covid-19 that were closed and scored during the year, 2021 offered many examples of Good Judgment’s Superforecasters providing early and accurate insights. The European Central Bank and financial firms such as Goldman Sachs and T. Rowe Price all referenced our forecasts in their work. The year also brought both new and returning collaborations with some of the world’s leading media organizations and authors.

- We worked with The Economist on their “What If” and “The World Ahead 2022” annual publications.

- The Financial Times featured our forecast on Covid-19 vaccinations on their front page and on their Covid-19 data page.

- Sky News launched an exciting current affairs challenge for the UK and beyond on our public platform GJ Open.

- Best-selling authors Tim Harford and Adam Grant also ran popular forecasting challenges.

- Adam Grant’s Think Again and Daniel Kahneman’s Noise (with coauthors Olivier Sibony and Cass R. Sunstein), both published in 2021, discuss the Superforecasters’ outstanding track record.

- Magazines such as Luckbox and Entrepreneur published major articles about Good Judgment and the Superforecasters.

Training Better Forecasters

Our workshops continued to attract astute business and government participants who received the best training on boosting in-house forecasting accuracy. Of all the organizations that had a workshop in 2020, more than 90% came back for more in 2021. And they were joined by many more organizations in the public and private sectors throughout the year. Many of these firms now regularly send their interns and new hires through our workshops. Capstone LLC, a global policy analysis firm with headquarters in Washington, DC, London, and Sydney, went a step further: They made our workshops the cornerstone of multi-day mandatory training sessions for all their analysts.

“This led to the adoption of [S]uperforecasting techniques across all of our research and a more rigorous measuring of all our predictions,” Capstone CEO David Barrosse wrote on the company’s blog. “Ultimately the process means better predictions, and more value for clients.”

As many in our company are themselves Superforecasters, we start any forecast about Good Judgment in 2022 by first looking back. The science of Superforecasting has shown that establishing a base rate leads to more accurate predictions. If the developments in 2021 are a valid indication, next year will bring more exciting projects, fruitful collaborations, and effective ways to bring valuable early insight to our clients.