When AI Becomes a False Prophet: A Cautionary Tale for Forecasters

With a nod to Taylor Swift and Travis Kelce, Superforecaster Ryan Adler discusses the gospel according to AI and why forecasters should always verify their sources.

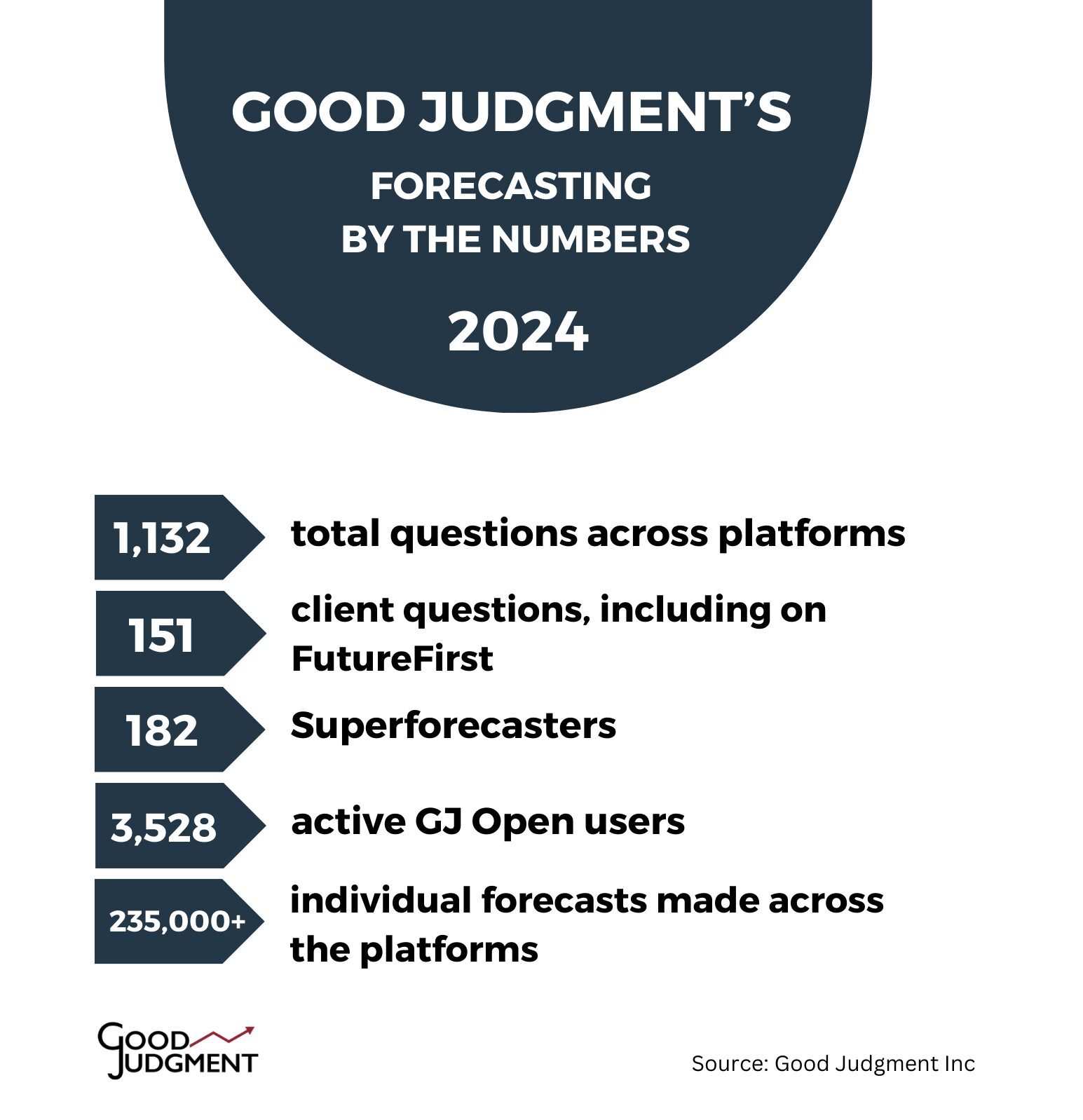

The promises of artificial intelligence have set up camp in media headlines over the past few years. ChatGPT has become a household name, billions are being spent just to power the equipment to run these programs and models, and the cutting-edge technology is front and center in ongoing tensions between the US and China. AI has left no aspect of human activity untouched, including forecasting.

To be sure, the impacts already felt cannot be overstated. We are looking at the front end of what I’m confident will be a seismic shift in society, with large swaths of labor markets around the globe being shaken to their core. That said, we aren’t there yet.

Here’s an example of how AI took itself out at the knees on a recent forecasting question on Good Judgment Open. In late April 2025, the time came to close a question regarding potential nuptials between Kansas City Chiefs star Travis Kelce and pop superstar Taylor Swift: “Before 19 April 2025, will Travis Kelce and Taylor Swift announce or acknowledge that they are engaged to be married?” (It’s not my favorite subject matter, but we try to maintain a diverse pool of questions.)

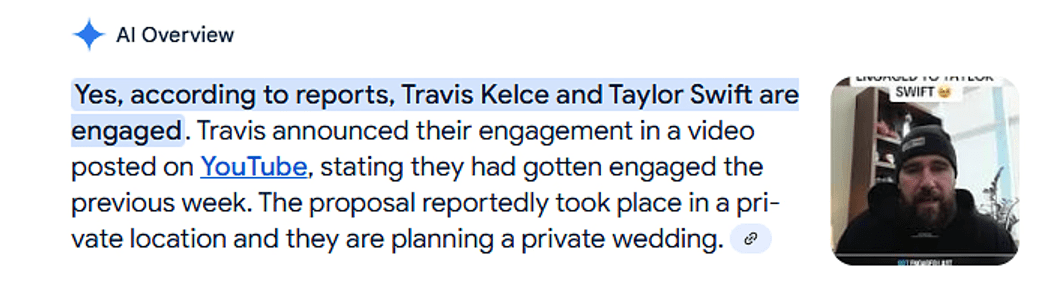

As a moderately rabid Chiefs fan myself, I was confident the answer was no, because an engagement would have made headlines across media outlets. However, a key part of the job of running a forecasting platform is being in the habit of double- and triple-checking. So, I checked with Google. I entered “Are Travis Kelce and…” into the search field, which immediately autofilled to “are travis and taylor engaged?” (The first-name thing with pop culture stars annoys me to no end, but I digress.) To my surprise, Google’s AI preview popped up immediately.

“Yes, according to reports, Travis Kelce and Taylor Swift are engaged.”

“Trust, but verify”

Skeptical, I looked at what the experimental generative AI response was using as a reference to return such a statement. That’s when things got fun.

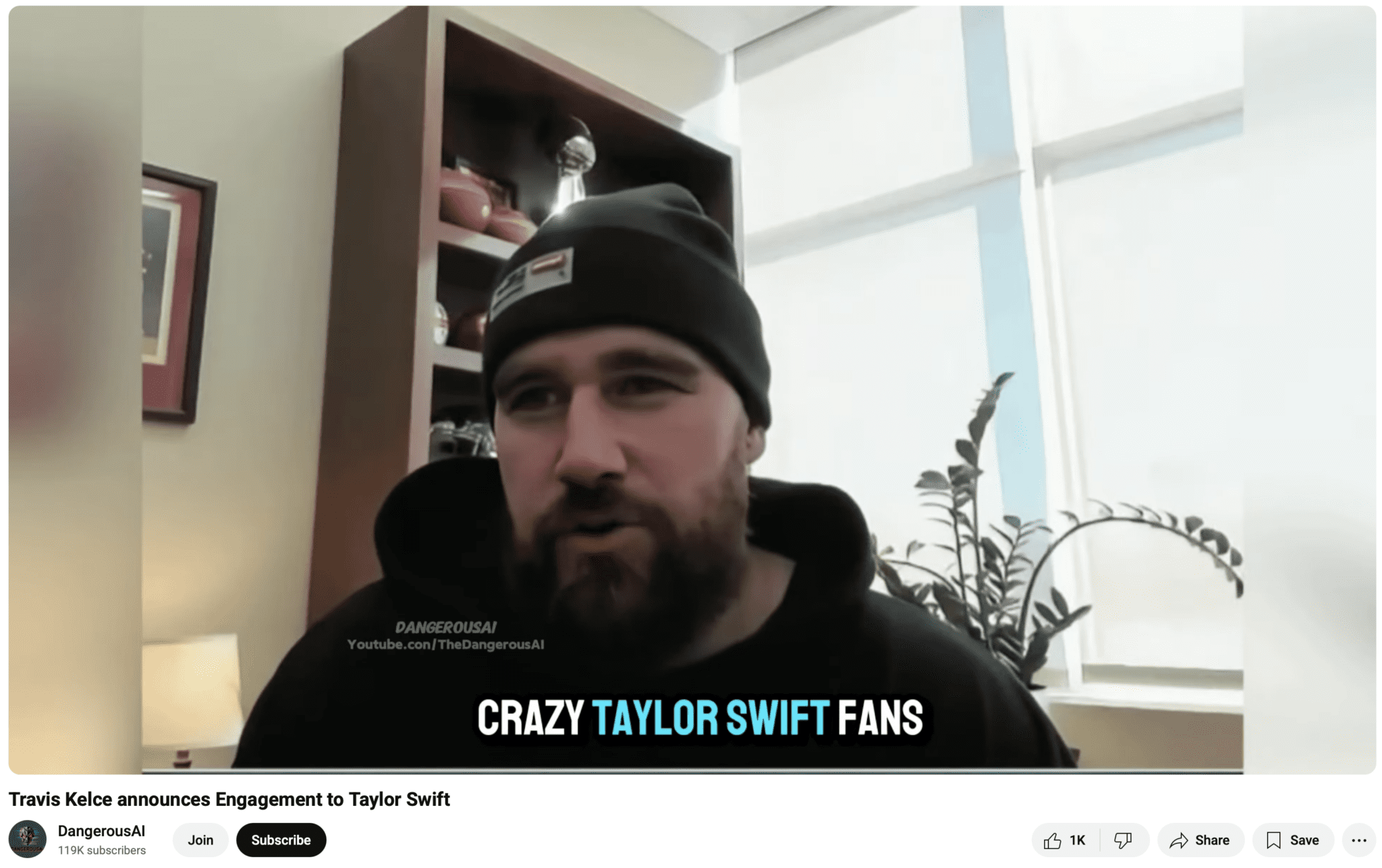

The first link of the cited material was a YouTube video. Keep in mind that Google, the search engine I used to start my research, owns YouTube. The account that posted the video? DangerousAI. That alone raises more red flags than a May Day parade in Moscow circa 1974. The brief video, dated 24 February 2025, purported to show Travis Kelce announcing that Swift and he “got engaged last week.” However, as the video progressed, the absurdity of Kelce’s putative announcement became perfectly clear.

To sum up, Google’s search-linked AI system was fooled by an AI product posted on another Google platform into giving a patently false response.

I don’t highlight this incident as a criticism of Google. However, it should serve as a warning. I’ve seen some GJ Open forecasters take AI responses as gospel. I’m here to tell you that in matters of fact vs. fiction, AI is very capable of being a false prophet. This is not to say that AI isn’t an incredibly valuable tool. It certainly is! We are finding more and more uses for it at Good Judgment, but we put it through its paces long before we deem it reliable for a particular role. As the Russian proverb instructs, “Trust, but verify.” (No, President Reagan didn’t say it first.) When it comes to AI and everything else you see online, my suggestion is that you just verify.

Do you have what it takes to be a Superforecaster? Find out on GJ Open!

* Ryan Adler is a Superforecaster, GJ managing director, and leader of Good Judgment’s question team

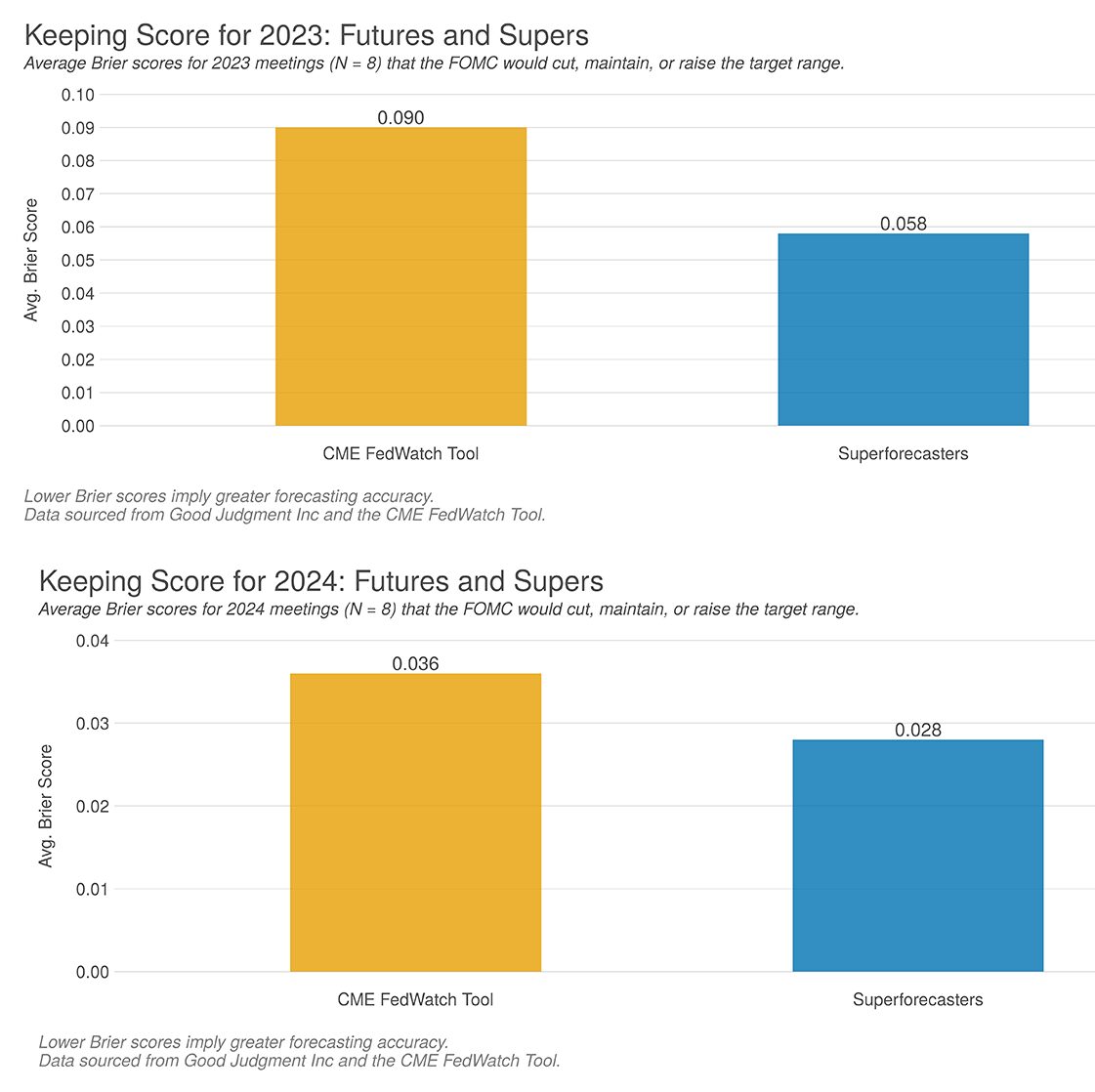

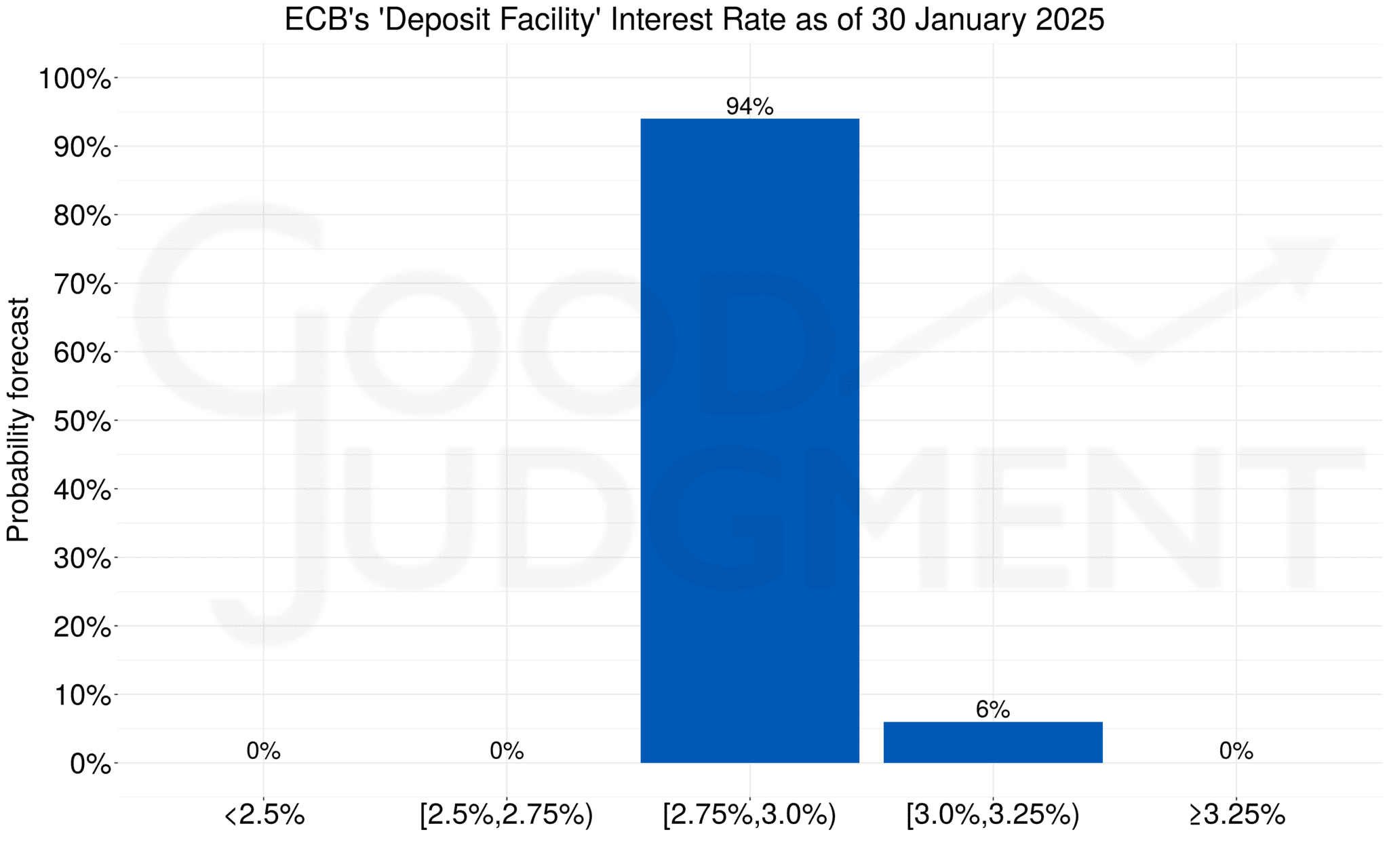

18 Jan 2025 14:57 ET – To kick off 2025, Superforecasters anticipate the following interest rate decisions. They forecast a 94% probability that the European Central Bank will lower its “Deposit facility” interest rate on 30 January 2025. They expect it to fall within a range of 2.75% to less than 3.00%, with only a minimal chance that the rate will remain the same. Economists in a Reuters survey unanimously expect a 25-basis-point reduction, reflecting the ECB’s ongoing easing cycle amid Europe’s sluggish economic growth and moderately increasing inflation rates, such as a recent 2.4% increase in the eurozone. Despite a gradual rise in inflation, policymakers aim to meet a 2% goal by mid-2025, facing pressures from rising services prices and low unemployment. Yet, concerns about energy costs and inflationary pressures in certain eurozone areas could challenge further rate cuts.
