Good Judgment’s 2024 in Review

Superforecasters always keep score. As we turn the page to 2025 at Good Judgment Inc, we look back at 2024 for highlights, statistics, and key developments.

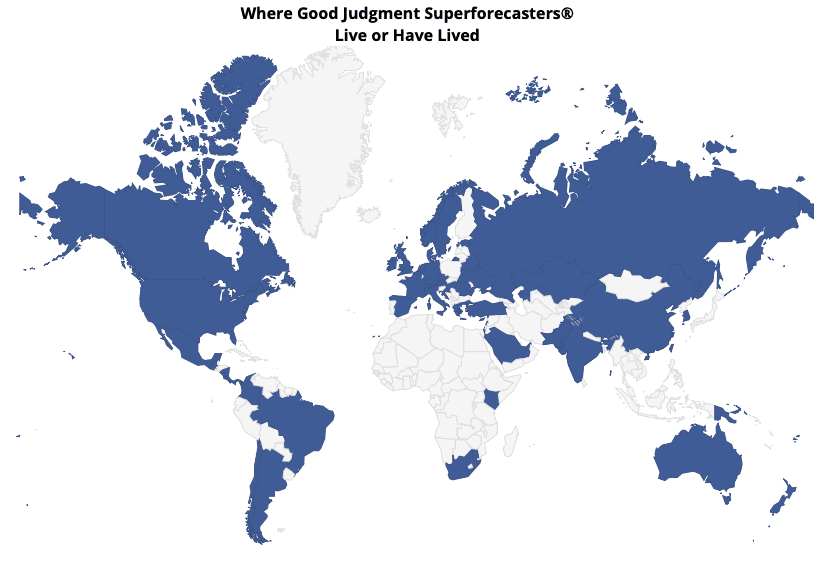

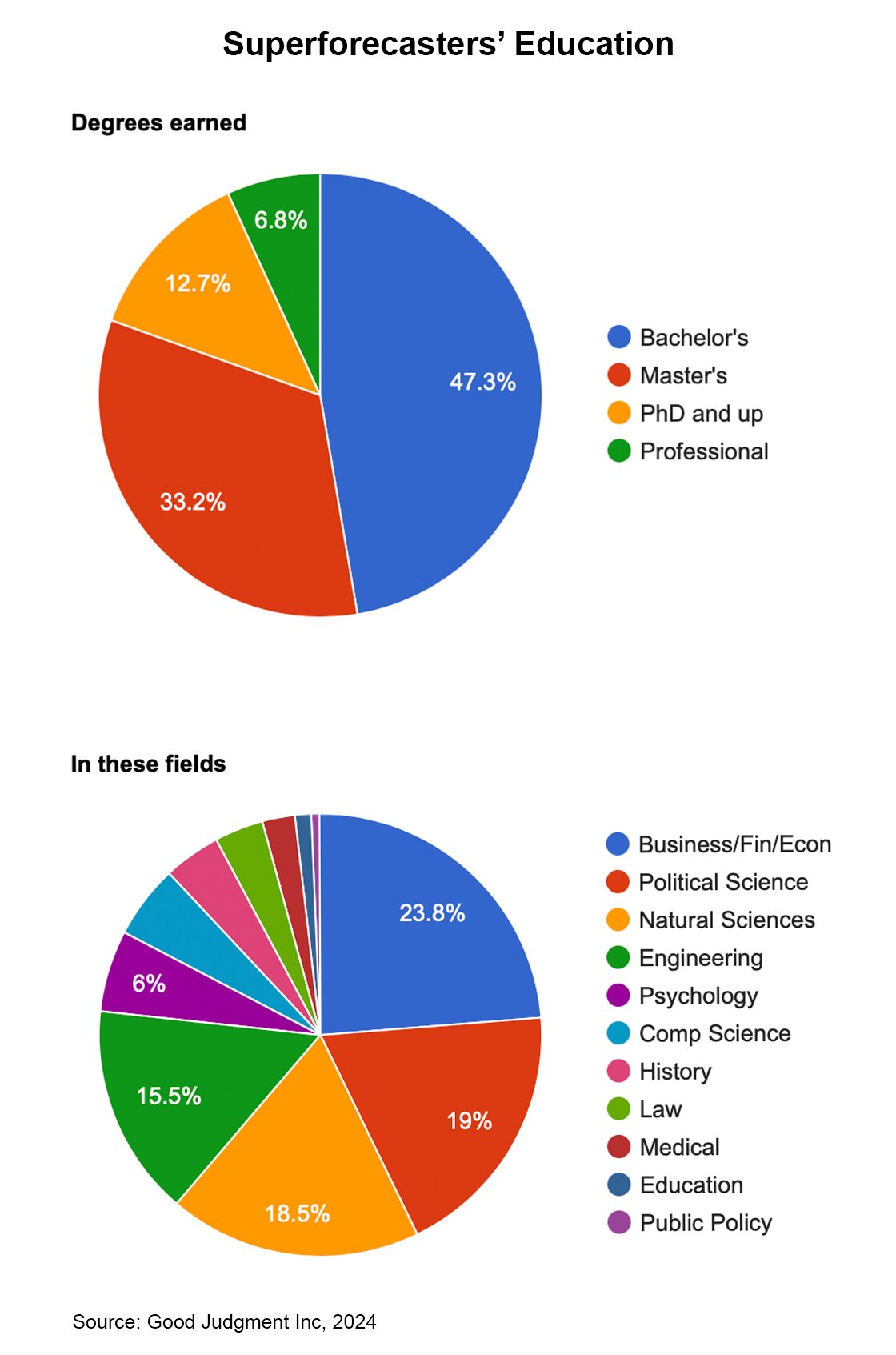

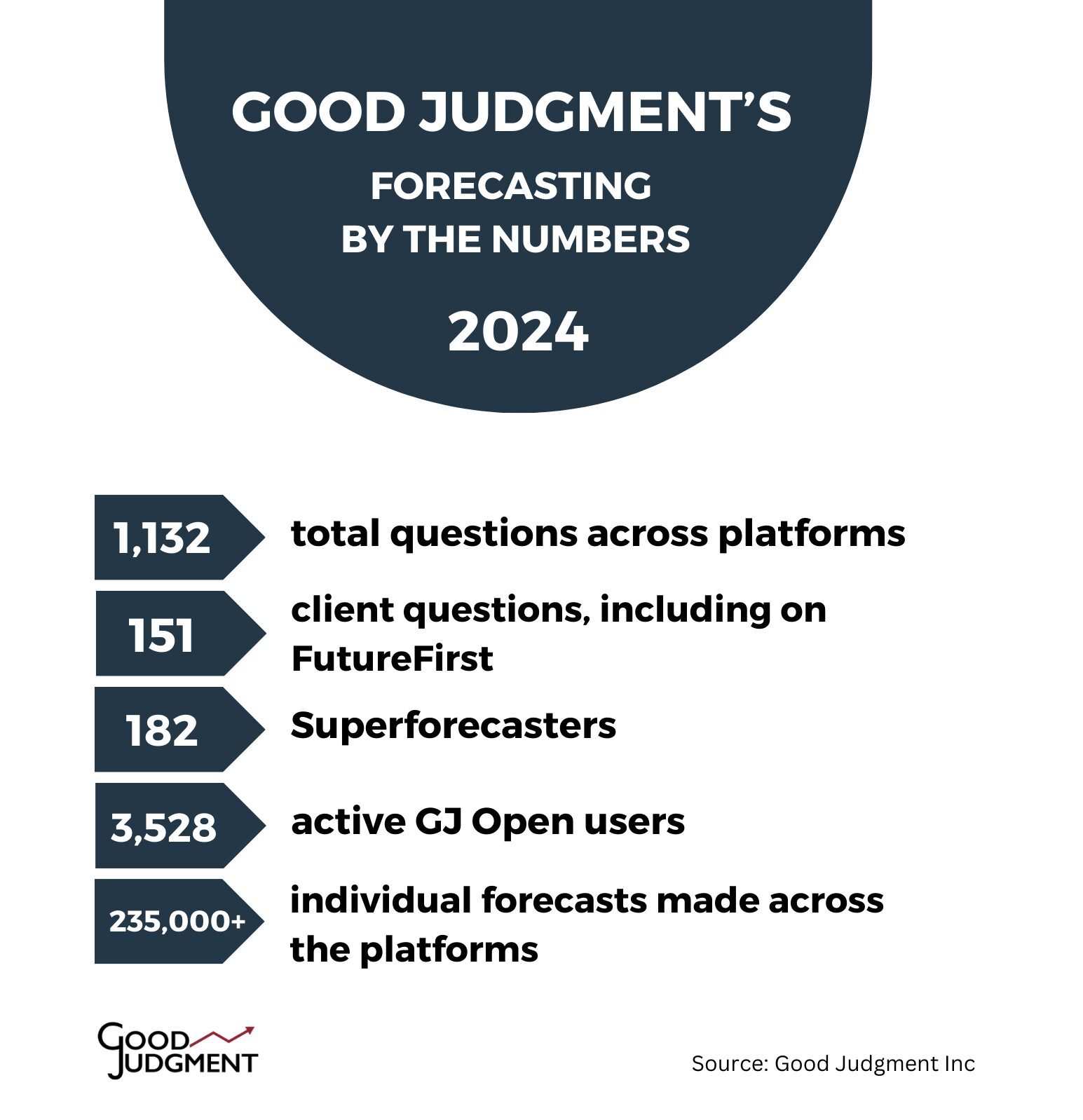

What a year it was! From monetary policy shifts to volatile election outcomes, our Superforecasters tackled some of the most complex forecasting questions to date. Good Judgment’s clients and FutureFirst™ subscribers posed 151 questions to the Superforecasters on our proprietary platform. Across all our platforms, including GJ Open, our public forecasting site, a total of 1,132 forecasting questions were live in 2024.

Forecasting Highlights

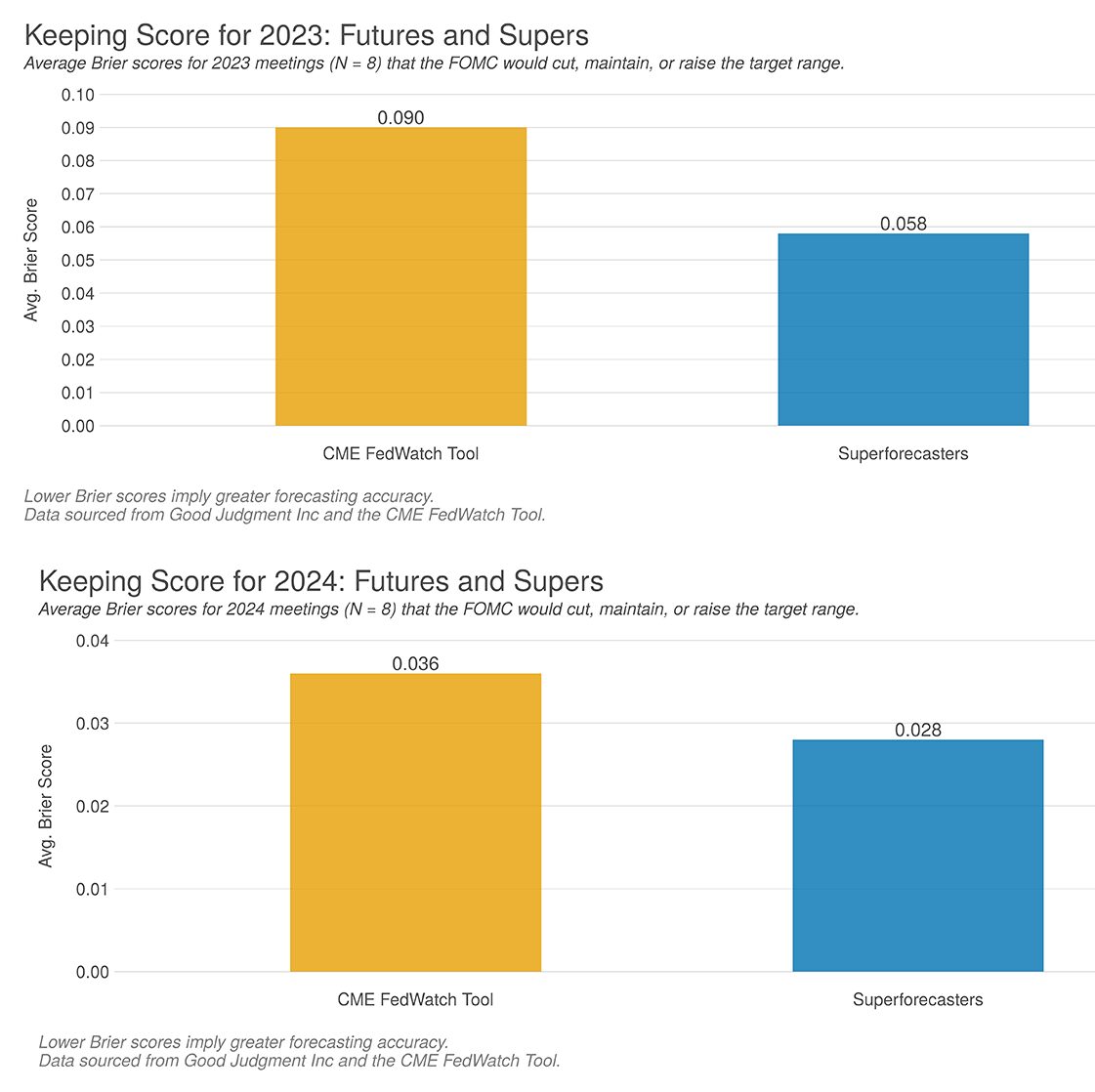

Good Judgment’s Superforecasters continued to outperform financial markets in 2024, which caught the eye of Financial Times reporters, among others.

“A group of lay people with a talent for forecasting have consistently outperformed financial markets in predicting the Fed’s next move,” wrote data journalist Joel Suss for FT’s Monetary Policy Radar in July 2024. For the year as a whole, the Superforecasters outperformed the futures markets by 22%.

Our partnership with ForgeFront and the UK Government’s Futures Procurement Network continued to grow in 2024. In November, Good Judgment’s CEO Dr. Warren Hatch spoke remotely at the Department for Environment, Food and Rural Affairs (DEFRA) Futures Trend Briefing, discussing the role of Superforecasting in supporting the UK’s Biological Security Strategy.

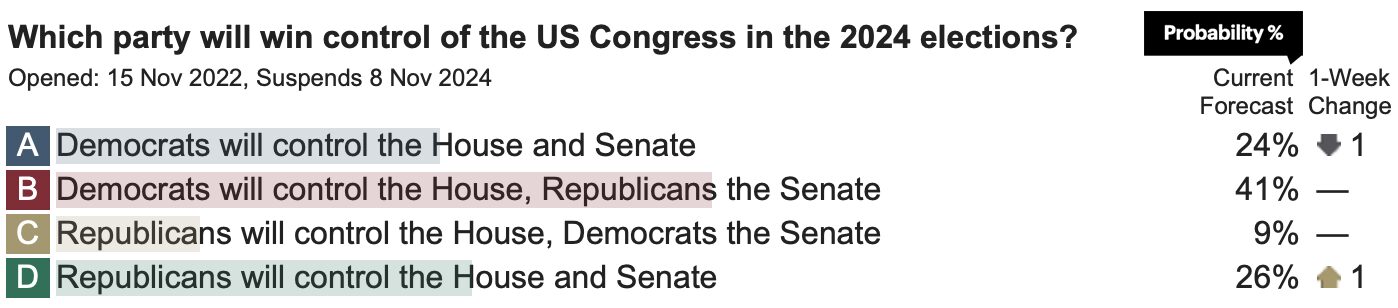

We also saw increased interest in subscriptions to specialized forecast channels on FutureFirst, including the “Superforecasting the Middle East” and “Superforecasting US Politics” channels. Individual FutureFirst channels provide access to a range of forecasting questions grouped by theme or topical focus. Like the all-inclusive subscription option, they come with daily forecast updates and API access.

Media and Research

Throughout the past year, Good Judgment continued partnering with leading media organizations. Notably, The Economist once again featured our Superforecasters’ outlook in “The World Ahead 2025.” This year’s focus was on US tariffs, elections in Germany, Canada, and Australia, China’s inflation, and the Russia-Ukraine conflict. Our Superforecasters were also referenced in The New York Times, Wired, and Vox, among other media outlets. (See our Press page for the full list.)

Our research partners at the Forecasting Research Institute continued to explore how Superforecasting can be applied to key questions, from AI forecasting capabilities to nuclear risk. The institute’s ForecastBench, released on 1 October 2024, found that the top-performing large language models (LLMs) lagged behind Superforecasters by 19%. (See our Science of Superforecasting page for all relevant scientific publications.)

Private and Public Challenges on GJ Open

GJ Open continued partnering with media, businesses, and educational institutions to host public and private forecasting challenges on their behalf. Our public challenges included those from UBS Asset Management Investments, Man Group, Fujitsu, City University of Hong Kong, Harvard Kennedy School, and The Economist. We also hosted private challenges that helped our partners identify in-house forecasting talent and train interns and staff in probabilistic thinking and accountable decision-making. We supplied the tools, a secure platform, data analysis, and feedback from Superforecasters.

Training

We continued to provide forecasting training to professional teams and individuals, with an increase in both virtual and in-person workshops, seminars, and presentations. These sessions reached hundreds of individuals in dozens of government, non-profit, and private-sector organizations across the United States and abroad, including in the UK, the Netherlands, and Turkey.

We’re excited to continue this journey in 2025 and wish everyone a Happy New Year. May it be a year of thoughtful forecasts and better decisions!