A Strong Year for Good Judgment Inc: 2022 in Review and Outlook

What a year! With Russia’s invasion of Ukraine, protests in Iran, rising tensions in the Taiwan Strait, and inflation pressures around the world, 2022 was the busiest year yet at Good Judgment. Our esteemed clients and FutureFirst™ subscribers in the private sector, government, and non-profit organizations posed a record 181 questions to the professional Superforecasters. More than 80 questions resolved and were scored in 2022. We launched an additional 422 questions on Good Judgment Open, our public forecasting site and primary training ground for future Superforecasters.

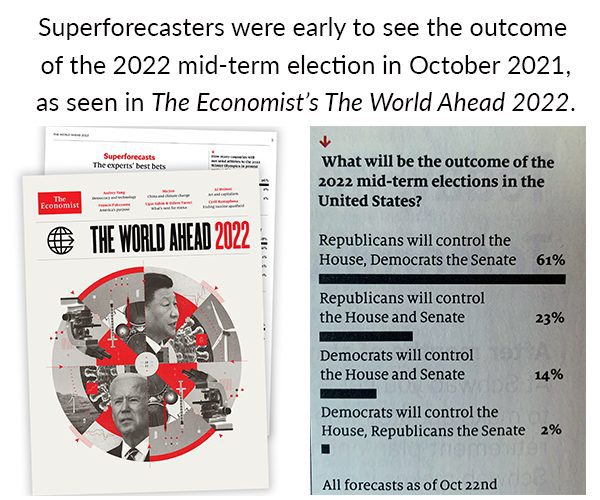

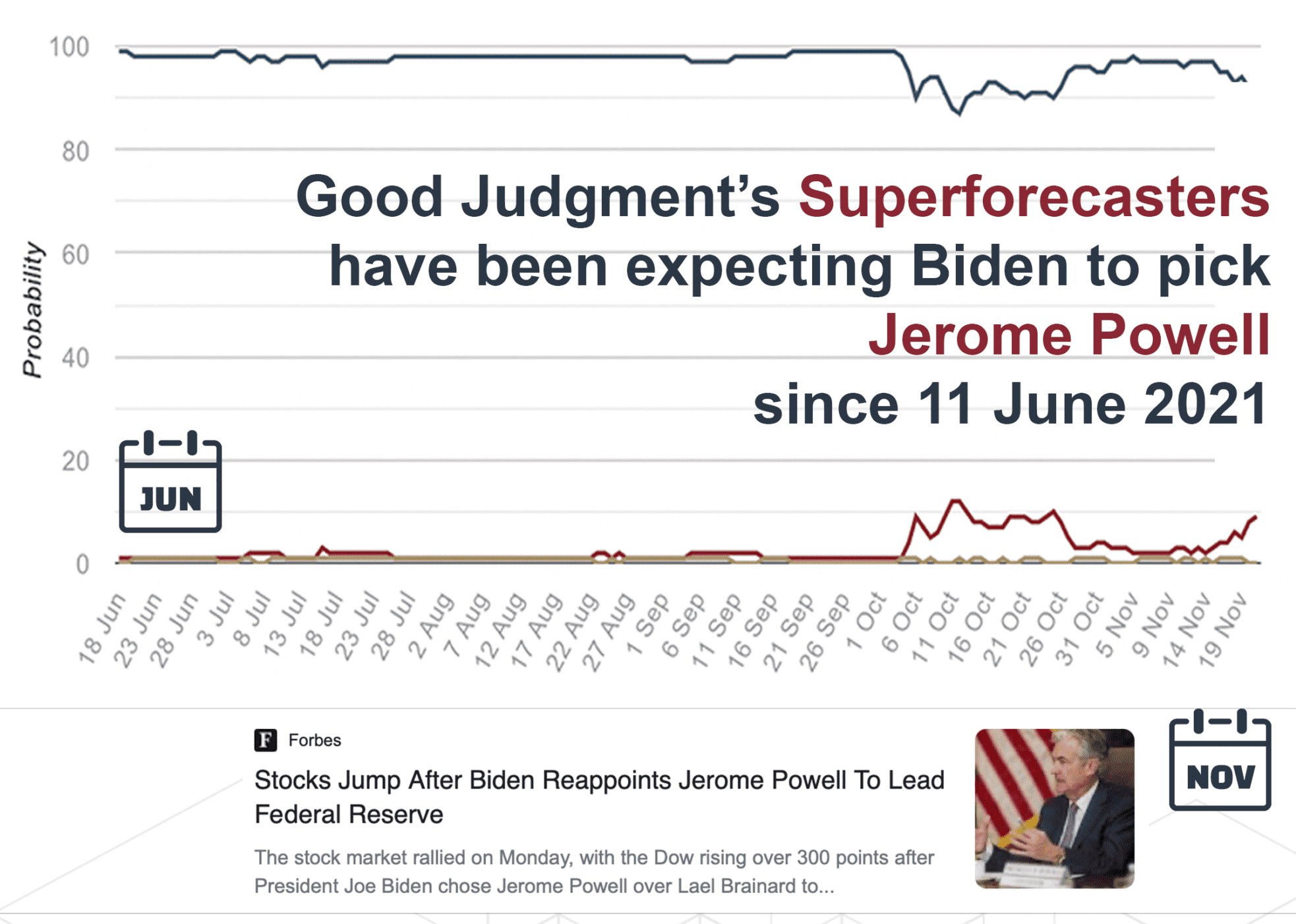

At the start of 2022, our Superforecasters called the 2022 US midterm election, as can be seen in The Economist’s The World Ahead publication, an ongoing collaboration that showcases how “data-driven approaches are becoming popular in all kinds of journalism.” Other appearances of Good Judgment and Superforecasting in the press and news can be found here. The Superforecasters also nailed the forecasts on Jerome Powell’s renomination to head the Federal Reserve and on the Tokyo Olympics. Looking ahead, their forecasts focus on the Russia-Ukraine conflict, tensions around Taiwan, the global economy, and key elections in 2023 (many of which are featured in The World Ahead 2023).

Here are some of the other key developments and projects we worked on in the past year:

We launched an updated version of our subscription-based forecast monitor, FutureFirst. In addition to a brand-new interface, the monitor has been equipped with the following features:

- Forecast Channels: Questions are grouped by theme or topical focus. Some of the current channels are US Elections, Ukraine, Geopolitics, Economics, Policy, Markets, China, and the Federal Reserve. These are available as part of FutureFirst or as standalone subscriptions.

- Implied Medians: In addition to probability ranges, for specific questions FutureFirst now includes a median as part of a continuous probability distribution.

- API Access: The FutureFirst REST API enables clients’ programs to retrieve daily updated forecast data for use in their models. Separate entry points deliver Question data, Forecast data, and Forecast Distribution data (for applicable questions). Data can be provided in JSON or CSV format.
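As a rough illustration of how a client program might work with distribution data, the sketch below parses a JSON payload and derives a median from binned probabilities. The field names (`bins`, `low`, `high`, `probability`) and the sample values are hypothetical and do not reflect the actual FutureFirst schema. Spreading probability evenly within each bin is an assumption made for the example, not a description of how FutureFirst calculates its implied medians.

```python
import json

# Hypothetical payload: field names and values are illustrative only,
# not the actual FutureFirst API schema.
SAMPLE_JSON = """
{
  "question": "Illustrative continuous question",
  "bins": [
    {"low": 0.0, "high": 2.0, "probability": 0.10},
    {"low": 2.0, "high": 4.0, "probability": 0.30},
    {"low": 4.0, "high": 6.0, "probability": 0.40},
    {"low": 6.0, "high": 8.0, "probability": 0.20}
  ]
}
"""


def implied_median(bins):
    """Return the 50th percentile of a binned forecast.

    Assumes probability is spread uniformly within each bin, so the
    median is found by linear interpolation inside the bin where the
    cumulative probability crosses 0.5.
    """
    total = sum(b["probability"] for b in bins)
    if total <= 0:
        raise ValueError("probabilities must sum to a positive number")

    cumulative = 0.0
    for b in sorted(bins, key=lambda item: item["low"]):
        share = b["probability"] / total  # normalize in case of rounding
        if share > 0 and cumulative + share >= 0.5:
            fraction = (0.5 - cumulative) / share
            return b["low"] + fraction * (b["high"] - b["low"])
        cumulative += share
    raise ValueError("could not locate the median bin")


forecast = json.loads(SAMPLE_JSON)
median = implied_median(forecast["bins"])
print(f"Implied median: {median:.2f}")
```

The same function would work on rows read from a CSV export, provided each row is first converted to a dictionary with numeric values.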

We have further refined our question cluster methodology, which illuminates complex topics with a diverse set of discrete questions. Each question can be valuable on its own, but taken together they provide decision makers with a robust real-time monitoring tool.

We expanded our workshops to include advanced training sessions on question generation and low-probability, high-impact “gray swan” events. We resumed offering in-person workshops in 2022 even as we continued to offer virtual trainings for teams scattered across the globe. The average Net Promoter Score across all sessions was 71, higher than Apple’s. Of all the organizations that had a workshop in 2021, more than 90% came back for more in 2022, and they now regularly send their interns and new hires through our training.

The US military continues to lead the way: Superforecasting training has been part of the curriculum for senior officers since 2019, and Good Judgment has been delivering semester-long courses at National Defense University in Washington, DC, continuously since the start of 2020. Over the same period, civil servants and military officers from Dubai have been forecasting during and after our annual two-week training courses.

We launched the Forecasting Aptitude Survey Tool, a screening tool that measures characteristics that Good Judgment’s research found correlate with subsequent forecasting accuracy. It’s been an integral part of our workshops for years, and we’re now pleased to provide it to organizations looking for an additional input to evaluate their existing or prospective staff.

To help advance decision-making skills among high school students, Good Judgment partnered with the Alliance for Decision Education to launch a pilot forecasting challenge for selected schools across the United States. We are continuing and expanding this project in 2023. At the other end of the education spectrum, we collaborated with the Kennedy School of Government at Harvard University in a semester-long course that honed the forecasting skills of top graduate students.

With climate change becoming a growing concern worldwide, we partnered with adelphi, a leading European think tank for climate, environment, and development, to produce “Seven questions for the G7: Superforecasting climate-fragility risks for the coming decade,” published in 2022. Commissioned by the multilaterally backed climate security initiative Weathering Risk, it is the first report of its kind that applies the Superforecasting methodology to climate-related risks to peace and stability. We also partnered with Dr. William MacAskill, the author of the groundbreaking 2022 book What We Owe the Future, supplying 22 additional forecasts on the long-term impacts of climate change.

Finally, at the end of 2022, Good Judgment’s co-founder Philip Tetlock launched the non-profit Forecasting Research Institute, an exciting additional venture that will advance the frontiers of forecasting for better decisions. We look forward to contributing to its efforts in 2023 and beyond.

With gridlock in Washington, rising geopolitical tensions, and fierce global economic headwinds, 2023 is already shaping up to be another exciting year of uncertainty. We look forward to those challenges and to contributing our forecasts and insights with the help of a phenomenal staff at Good Judgment Inc, the unrivalled skills of our professional Superforecasters, and the support of our expanding roster of clients and partners around the world.

Please sign up for our newsletter to keep up with our news in 2023.